the Creative Commons Attribution 4.0 License.

Robot-assisted activities of daily living (ADL) rehabilitation training with a sense of presence based on virtual reality

Liaoyuan Li

Tianlong Lei

Jun Li

Xiangpan Li

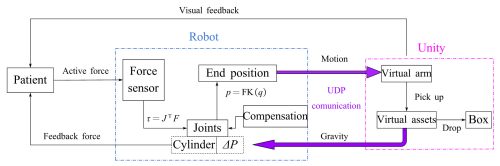

The integration of virtual reality (VR) technology with rehabilitation robots significantly improves rehabilitation training effectiveness. Although most studies employ VR games to engage patients, few explore activities of daily living (ADL) that incorporate visual, auditory, and force feedback. This article discusses the use of Unity software and a 4-degree-of-freedom upper-limb rehabilitation robot to design a picking and placing training exercise that provides a sense of presence. An inverse kinematics algorithm creates a virtual character to simulate realistic actions and perspective changes. Encouraging prompts and sounds motivate patients, while initial load and friction compensation renders the robot transparent. Virtual objects are assigned different masses, transmitted via the User Datagram Protocol (UDP) and converted into air pressure, allowing a cylinder to generate downward force, simulating gravity. Upper-limb electromyography (EMG) signals are collected during training. The experiment results show that air pressure increases within 1 s to provide a sensation of gravity. Picking up various objects generates distinct EMG signals, with amplitude and variation comparable to dumbbell experiments. This suggests that the proposed ADL training effectively exercises the joints and muscles of the upper extremities, providing high realism in vision and force perception.

Motor deficit of the upper extremities is a prevalent symptom following a stroke. Individuals who experience a stroke report the loss of upper-limb function as a major disability (Feys et al., 1998). Impairment of the upper extremities limits the use of the limb and prevents the independent performance of activities of daily living (ADL) (Broeren et al., 2004). Recovery of the affected upper extremity depends on the regularity and intensity of training (Kim et al., 2022). There is a growing body of evidence that training of the paretic extremity must be repetitive, task-oriented, intensive, and motivating for neuroplasticity to occur (Nagappan et al., 2020).

Multi-channel stimulation and feedback are more conducive to rehabilitation training (Sigrist et al., 2013). Virtual reality (VR) technology can create a virtual environment in which users receive sensory (e.g., visual and auditory) feedback (Nath et al., 2024), and it is widely used to provide such feedback in rehabilitation training (Archambault et al., 2019; Goršic et al., 2020; Bortone et al., 2018). It is not only beneficial for rehabilitation training but also an attractive feature in the field of surgical operation simulation (Malukhin and Ehmann, 2018). A major advantage of VR interventions is that individuals regard such interventions as enjoyable exercise games rather than treatment, thereby increasing motivation and treatment compliance (Shin et al., 2014; Caldas et al., 2020; Norouzi-Gheidari et al., 2019). During VR training, mirror neurons, which increase their firing rates when an individual observes a particular movement being performed, can induce cortical reorganization and contribute to functional recovery (Oztop et al., 2013). Research shows that VR can be a beneficial environment for rehabilitation (Triandafilou et al., 2018) and that relevant health games are promising tools for rehabilitation (Tao et al., 2021).

The direct purpose of rehabilitation training is to restore patients' physical and cognitive abilities, but the ultimate goal is for them to return to society and family with the necessary living abilities. ADL training is a type of occupational therapy (OT) that can stimulate patients' willingness to participate in training and has higher training effectiveness (Dobek et al., 2007) because OT is specific training based on patients' will to improve their abilities. The correlation between training repetition and improved motor ability is higher for OT than for physical therapy (PT) (Huang et al., 2009).

1.1 Related work

The use of VR technology for ADL training is a research hotspot. Head-mounted displays (HMDs) are used to present virtual environments, and daily actions such as pouring a drink and washing one's face are designed with game controllers (Razer Hydra sticks) and a mouse as control input devices (Gerber et al., 2018; Rojo et al., 2022; Chatterjee et al., 2022). Such training uses a handle to control the movement direction of the virtual objects, so data flow only one way, from the physical system to the virtual system, and only visual feedback is provided. Turolla et al. (2013) used a virtual reality rehabilitation system and a three-dimensional motion tracking system to study the effectiveness of virtual reality technology in rehabilitation training. However, the training scenes of the system are fixed and cannot dynamically adapt to the state of the trainee. In addition, the above methods do not combine virtual systems with rehabilitation training robots, so they can only provide visual feedback with limited motion range in the real world.

Some researchers have shown that using VR as an assistive feature in robot-assisted therapy (RAT) improves the entire recovery process of motor function (Ibrahim et al., 2022). The implementation of VR in RAT provides more focus, engagement, fun, and novelty to the rehabilitation process, resulting in better active participation (Dehem et al., 2019). However, rehabilitation equipment that only provides visual and auditory feedback cannot fully immerse patients (Sveistrup, 2004). Meanwhile, clinical studies have also shown that implementing task-oriented exercises and multi-proprioceptive fusion training can enhance patients' ability to complete daily activities independently (Mostajeran et al., 2024). In addition to visual and auditory feedback, force feedback is an important means of forming a sense of presence. Force feedback in virtual games has been achieved using the PHANToM haptic interface device (Bardorfer et al., 2001). However, this device cannot perform large-scale training on the upper limbs and cannot meet the needs of ADL training. Marchal-Crespo et al. (2017) combined virtual reality with a force feedback strategy based on position error. This strategy provides patients with three different force feedback modes (no guidance, error reduction, and error amplification) while providing gravity and friction compensation. In the error reduction and error amplification modes, the controller provides the patient with a force that is the same as or opposite to the expected direction according to the tracking accuracy, and the magnitude of the force is related to the position error. Wright et al. (2017) built a customized force field after several trials to assist targeted reaching and circular movement, providing real-time visual feedback of the robot's end-effector position. Abdel Majeed et al. (2020) designed a viscous force field related to exercise speed based on clinical research results and studied the impact of speed changes on training effectiveness. The above force fields are relatively fixed; they are not associated with activities of daily living and lack the fun of games. Compared to simple rehabilitation tasks, rehabilitation tasks that are similar to real-life scenarios require more complex brain activities (Di Diodato et al., 2007), which can provide more information to the brain and contribute to neural stimulation.

1.2 Proposed method

In this study, a self-developed upper-limb rehabilitation robot is combined with Unity3D software to implement ADL training in the form of picking up and placing objects. The system provides visual, auditory, and force feedback with realistic virtual scenes, which makes the training attractive and immersive. It is suitable for stroke patients who retain a certain degree of strength in the upper limb. The remainder of this paper is organized as follows. Section 2 introduces the robot system and the proposed method, including the construction of the virtual scene and the training strategy. Section 3 presents experimental verification results. Section 4 discusses the results, and the conclusions of this work are given in Sect. 5.

2.1 4-DOF robot for upper-limb rehabilitation

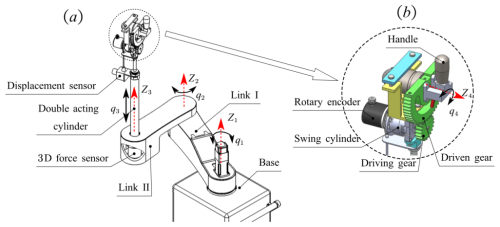

Figure 1 depicts the robot designed for ADL rehabilitation training. It is upgraded based on our previous 3-degree-of-freedom (DOF) training robot (Li et al., 2022). It has 4 DOFs, including three rotations (q1, q2, and q4), and one translational movement (q3). The axes of the joints are Z1, Z2, Z3, and Z4. The first two rotating joints in Fig. 1a are driven by two alternating current (AC) servo motors equipped with absolute encoders. They are in charge of the adduction and abduction of the shoulder and the flexion and extension of the elbow in the horizontal plane. A double-acting cylinder drives the vertical moving joint, which has a stroke of 150 mm. It is responsible for the flexion/extension of the shoulder in the sagittal plane. A displacement sensor is installed at the end of the cylinder. A three-dimensional force sensor connects Link II and the linear cylinder. In Fig. 1b, the fourth joint rotation is driven by a swing cylinder with gear reduction and recorded by a rotary encoder. By mounting a handle on the driven gear, it accounts for forearm rotation.

2.2 Virtual ADL scene

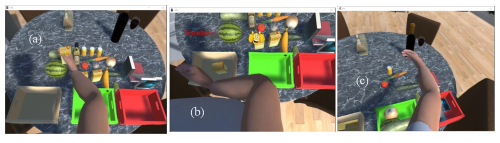

A daily-life scenario for picking up and organizing items was built in Unity software. The version of the Unity editor is 2021.1.1.0f1c1. The required daily items and models were downloaded from the Unity Asset Store. The constructed virtual scene is shown in Fig. 2. There are items such as fruits, vegetables, beverages, and books on the desk. The training task is to control the virtual human upper limb to pick up different items and place them in three boxes on the desk. The virtual character stands in a fixed position. By adding an inverse kinematics algorithm to the character, the upper limbs and body can bend and extend when picking up objects. In addition, the view of the virtual character varies with the position of the right hand to ensure that objects and hands are always in the field of vision during training. Dynamic perspective changes make training more realistic and attractive.

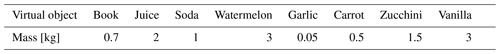

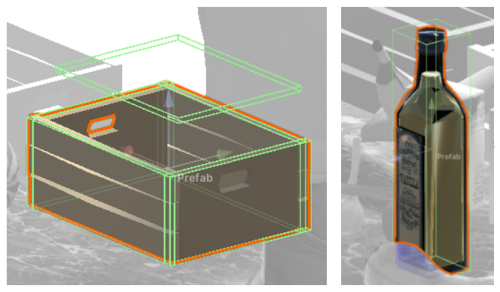

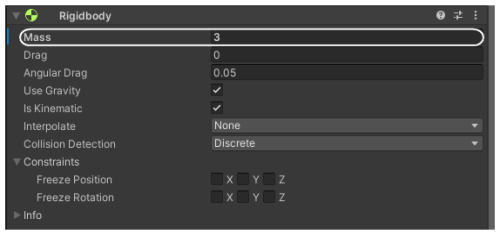

Colliders are added to the boxes and to all objects that need to be picked up, as shown in Fig. 3. The light-green wireframe in the figure is the collider. A rigid body component (Fig. 4) is added to each item with the Use Gravity and Is Kinematic options checked. The items are assigned different masses, as shown in Table 1.

During training, if the subject successfully picks up the item and places it in the box, the system also provides positive feedback to motivate the subject to actively complete the training. For example, successful placement results in encouraging text such as “Excellent” and “You can do it”, as well as corresponding images. In addition, there is corresponding audio encouragement to provide visual and auditory stimulation to the subject.
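The collider-based pick-up and the placement feedback described above can be illustrated with a small sketch. It is written in Python rather than Unity C#, and it is not the authors' implementation: the axis-aligned box colliders, the class and function names, and the item mass used in the test are assumptions made for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Box3D:
    """Axis-aligned collider given by its centre and half-extents (x, y, z)."""
    center: tuple
    half: tuple

    def overlaps(self, other: "Box3D") -> bool:
        # Two boxes overlap when their centres are close enough on every axis.
        return all(abs(a - b) <= ha + hb
                   for a, b, ha, hb in zip(self.center, other.center,
                                           self.half, other.half))


@dataclass
class Item:
    name: str
    mass_kg: float          # placeholder value, not taken from Table 1
    collider: Box3D
    held: bool = False
    placed: bool = False


def update(hand: Box3D, item: Item, box: Box3D):
    """Advance one frame; return a feedback message on successful placement."""
    if item.placed:
        return None
    if not item.held and hand.overlaps(item.collider):
        item.held = True                       # hand touched the item: pick it up
    if item.held:
        item.collider.center = hand.center     # the item follows the hand
        if item.collider.overlaps(box):
            item.held, item.placed = False, True   # released into the box
            return "Excellent"
    return None
```

Calling `update` once per frame with the current hand collider is enough to drive the pick, carry, and place sequence; the returned message stands in for the encouraging text, image, and audio.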

2.3 Realization strategy

2.3.1 Connection between the robot and the virtual system

The virtual system should be connected to the rehabilitation robot platform, including data transmission and motion synchronization. The User Datagram Protocol (UDP) is used to achieve communication between the virtual system and the robot. UDP is a type of transport layer communication protocol. Like all network protocols, UDP is a standardized method for transferring data between two computers in a network. Compared to other protocols, UDP completes this process in a simple way: it directly sends data packets (units of data transmission) to the target computer without establishing a connection first, indicating the order of the data packets, or checking whether the data arrive as expected. Currently, most application processes still use Transmission Control Protocol (TCP) connections for client–server communication, but the UDP connection simplifies many aspects of design. In addition, the implementation is simpler, and due to the reduction of code, the process of debugging the application is much easier (Soundararajan et al., 2020).

The IP address of the secondary computer is 192.168.0.1. The port for sending data is 25001. The port to receive data is 23333. The IP address of the host computer is 192.168.0.2.
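A minimal Python sketch of such a UDP link is given below for illustration; the authors' virtual scene runs in Unity, so this is not their code. The address and port numbers are the ones listed above, but which side uses which port, the payload layout (one little-endian 32-bit float carrying the object mass in kg), and all function names are assumptions.

```python
import socket
import struct

ROBOT_ADDR = ("192.168.0.1", 25001)  # secondary computer; role of this port is assumed
LISTEN_PORT = 23333                  # port assumed to receive data from the robot side


def encode_mass(mass_kg: float) -> bytes:
    """Pack the mass into a 4-byte little-endian float (assumed payload layout)."""
    return struct.pack("<f", mass_kg)


def decode_mass(payload: bytes) -> float:
    """Inverse of encode_mass."""
    return struct.unpack("<f", payload)[0]


def send_mass(mass_kg: float, addr=ROBOT_ADDR) -> None:
    """Send one datagram; UDP opens no connection and gives no delivery guarantee."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(encode_mass(mass_kg), addr)


def receive_once(port: int = LISTEN_PORT, timeout_s: float = 1.0):
    """Wait for one datagram on the given port; return None if nothing arrives."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind(("", port))
        sock.settimeout(timeout_s)
        try:
            payload, _sender = sock.recvfrom(1024)
        except socket.timeout:
            return None
        return decode_mass(payload)
```

Because UDP neither orders nor acknowledges packets, a receiver built this way should simply use the most recent value it gets, which suits a quantity such as the current object mass that is resent whenever it changes.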

2.3.2 Transparency of the robot motion

The motion of the robot's end in the x, y, and z directions controls the movement of the virtual human's right hand to pick up objects. The fourth joint of the robot controls the wrist flexion and extension motion to make the arm's posture more realistic. The picking and dropping of items rely on the collision detection function of the Unity system. When the right hand collides with an object, the position of the hand is assigned to the object, producing a picking-up effect. When an item touches a box, the position synchronization between the item and the right hand is released, and the item falls into the box under gravity, indicating successful placement. Before an object is picked up, the subject's upper limb should move under zero load, so that the subject can reach the object easily while dragging the robot, as if the robot did not exist. This is called the transparency of the robot's motion. For this purpose, the friction forces of the robot's joints are measured and compensated, and the initial load in the vertical direction (such as the weight of the robot's end components and the weight of the human upper limb) is also compensated. Additionally, the rotational friction of the first two joints is identified and compensated. The robot is then dragged by combining statics (Eq. 1) and the admittance algorithm (Eq. 2).

where τ is the torque of the joints, J is the Jacobian matrix, and F is the interactive force matrix.

where k is the admittance coefficient and Δq is the increment in the position of the joints.
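Given these definitions, the two relations are most naturally read as the standard static mapping τ = JᵀF and a proportional admittance law Δq = kτ. Both forms are assumptions inferred from the symbol definitions, not equations quoted from the paper. The sketch below applies them for one control cycle to a planar two-link arm standing in for the first two rotary joints; the link lengths, the gain, and the function names are hypothetical.

```python
import math


def planar_jacobian(q1, q2, l1, l2):
    """Jacobian of a planar two-link arm (illustrative stand-in for joints 1 and 2)."""
    s1, c1 = math.sin(q1), math.cos(q1)
    s12, c12 = math.sin(q1 + q2), math.cos(q1 + q2)
    return [[-l1 * s1 - l2 * s12, -l2 * s12],
            [l1 * c1 + l2 * c12, l2 * c12]]


def joint_torque(J, F):
    """tau = J^T F: map the measured end force to equivalent joint torques."""
    rows, cols = len(J), len(J[0])
    return [sum(J[r][c] * F[r] for r in range(rows)) for c in range(cols)]


def admittance_step(q, F, k, l1=0.3, l2=0.3):
    """Return the next joint targets q + k * tau for one control cycle.

    l1, l2 (m) and k are hypothetical values chosen only for the example.
    """
    tau = joint_torque(planar_jacobian(q[0], q[1], l1, l2), F)
    return [qi + k * ti for qi, ti in zip(q, tau)]
```

With zero interaction force the joint targets do not change, and a larger k makes the arm yield more readily to the same push, which is the behaviour a transparent robot needs.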

2.3.3 Sense of presence for the subject

This training can enable the upper limbs to be trained in three-dimensional space. In addition to the visual feedback, using the weight of the object as vertical force feedback increases the participants' sense of presence, that is, to provide participants with a sense of force that is close to reality when picking up an object.

After the object is successfully picked up, the program obtains its mass in real time and converts it into a gravitational force applied to the third joint of the robot, so that the subject feels the weight of the object when dragging the third joint to move it. Specifically, the gravity of the object is converted into air pressure and applied to the rod chamber of the linear cylinder, generating a downward force at the robot end that simulates the gravity of the object. The schematic diagram of the training strategy is shown in Fig. 5. The expression p=FK(q) stands for the forward kinematics that computes the Cartesian coordinate p from the joint position q. After the item is put down, the system returns to the initial state, so the entire process matches the feel of the daily activity. The variation in pressure in the two chambers of the cylinder is based on Eqs. (3) and (4). Equation (3) indicates that before an object is picked up, the pressure in the rodless chamber of the cylinder, denoted as P1, compensates for the initial vertical load and friction, and the pressure in the rod chamber, denoted as P2, is zero. In this state, the cylinder's displacement can be easily controlled to reach the object. Equation (4) describes the pressures in the two chambers of the cylinder after the object is picked up. The pressure in the rod chamber, P2, is mainly determined by the gravitational force of the picked object, denoted as Gv, providing the patient with a sense of weight.

where Pinitial load represents the pressure required to compensate for the initial vertical load and Pfriction represents the pressure required to compensate for friction.

where Gv represents the gravitational force of the picked-up object and A2 is the area of the rod chamber of the cylinder.
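Read together, these definitions suggest that P1 is the sum of the initial-load and friction compensation pressures in both states, that P2 is zero before pick-up, and that P2 = Gv / A2 afterwards. This reading is an assumption inferred from the text, and the original equations may contain further terms. The sketch below illustrates the conversion; the bore and rod diameters, the compensation pressures, and the function names are placeholders, not the robot's parameters.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def rod_chamber_area(bore_m: float, rod_m: float) -> float:
    """Annular piston area A2 on the rod side of the cylinder, in m^2."""
    return math.pi / 4.0 * (bore_m ** 2 - rod_m ** 2)


def chamber_pressures(mass_kg, p_initial_load, p_friction, a2_m2, holding):
    """Return (P1, P2) in Pa before (holding=False) or after (holding=True) pick-up."""
    # Rodless chamber: compensation for the initial vertical load and friction.
    p1 = p_initial_load + p_friction
    # Rod chamber: the virtual object's weight Gv = m * g rendered as pressure.
    p2 = mass_kg * G / a2_m2 if holding else 0.0
    return p1, p2
```

For a hypothetical 32 mm bore with a 12 mm rod, a 2 kg virtual object corresponds to roughly 28 kPa in the rod chamber under this reading.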

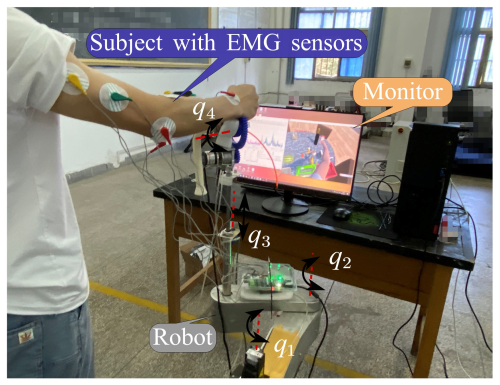

3.1 Experiment of the transparency of the robot

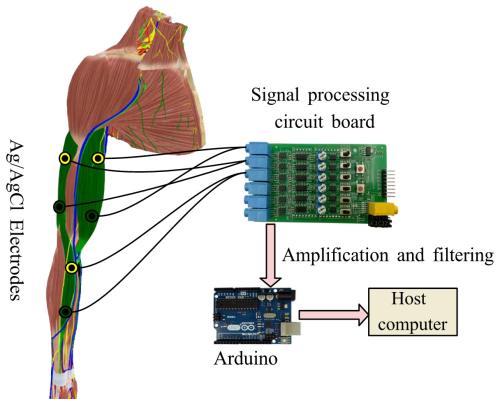

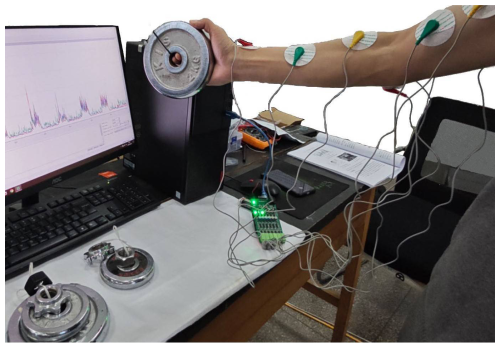

A healthy volunteer was informed of the precautions and safety operations during the experimental process, and they expressed their agreement to participate in the proposed ADL training experiment. Electromyography (EMG) signals from the biceps brachii, triceps brachii, and brachialis flexor muscles in the upper extremities are measured during training to show active force. Muscles chosen for EMG collection are highlighted in green, as shown in Fig. 6. The EMG signal sensor is a six-channel electromyographic sensor that includes a signal amplification and filtering circuit board and an Arduino control board.

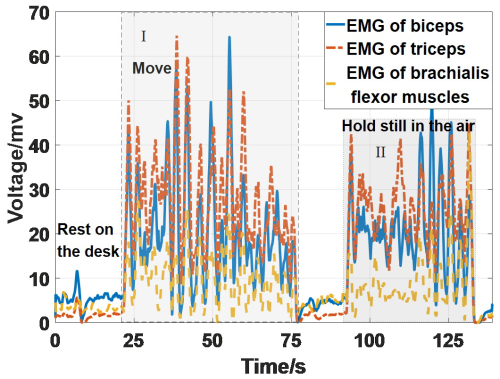

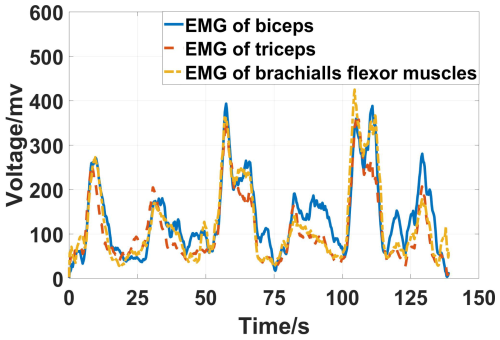

To verify the transparency of the robot, a control experiment was conducted. First, the subject moved their upper limb without being coupled to the robot. The EMG signals are shown in Fig. 7. Section I represents the signals while the arm is moving, and section II represents the signals while the arm is held still in the air. If the arm rests on the desk, the signals are very small. It can be seen that the EMG sensor device reflects changes in muscle strength; all signal amplitudes are below 70 mV, and most lie between 10 and 40 mV.

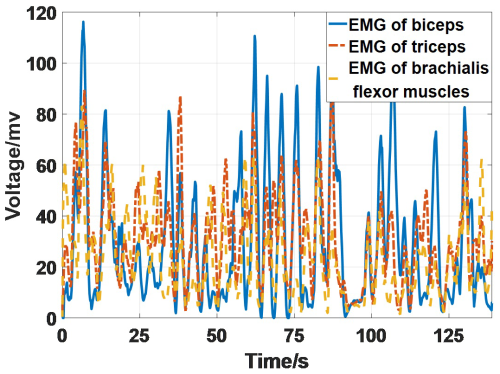

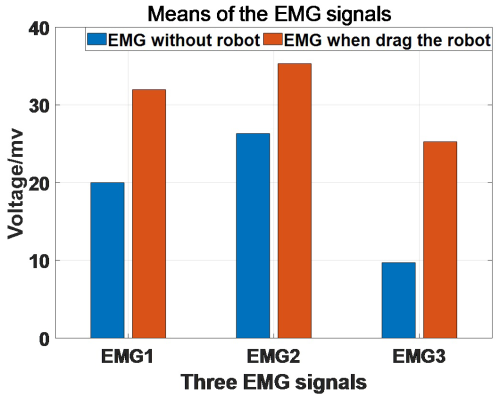

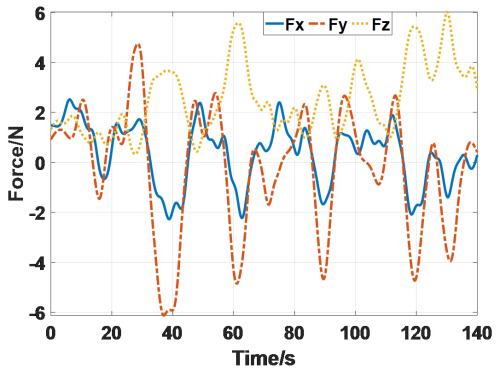

Second, the subject dragged the robot with the compensation active. The EMG signals are shown in Fig. 8; they are stronger than those in Fig. 7. Figure 9 shows the mean signals during motion for the two scenarios. EMG1 represents the EMG of the biceps, EMG2 represents the EMG of the triceps, and EMG3 represents the EMG of the brachialis flexor muscle. Dragging the robot does require more effort than free motion, but the increase is small. The interactive force is shown in Fig. 10. Fx, Fy, and Fz are the interactive forces in the x, y, and z directions measured by the force sensor. The forces are smaller than 6 N. Therefore, the robot can be considered to have good transparency.
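One way to obtain per-channel means such as those compared in Fig. 9 is to average the absolute amplitude over the motion window. The sketch below assumes that procedure; the paper does not state how the means were computed, and the function names and sample values in the test are illustrative only.

```python
def mean_amplitude(samples_mv, start, stop):
    """Mean absolute EMG amplitude (mV) over samples_mv[start:stop]."""
    window = samples_mv[start:stop]
    if not window:
        raise ValueError("empty motion window")
    return sum(abs(v) for v in window) / len(window)


def relative_increase(free_mv, coupled_mv):
    """Fractional change from free-arm motion to robot-coupled motion."""
    return (coupled_mv - free_mv) / free_mv
```

Applying `mean_amplitude` to each of EMG1, EMG2, and EMG3 in both scenarios and then `relative_increase` to each pair gives a single number per muscle for how much extra effort the robot demands.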

3.2 Experiment with the virtual ADL scene

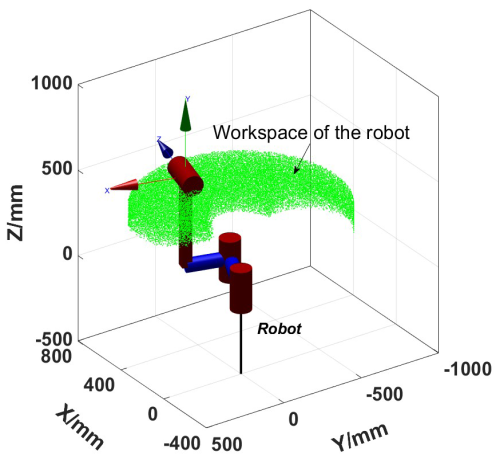

First, the robot system was started and each joint of the robot was moved to its initial position, ensuring that the end could move in six directions. The initial configuration and the workspace of the robot are shown in Fig. 11. The cylinder is extended to its maximum stroke because picking up an object requires a downward motion. Then, the virtual scene was started and connected to the robot system.

Figure 12. (a) Picking up the juice. (b) Placing the juice in the box. (c) The training is about to be completed.

The subject first picked up a box of juice and planned to put it in the left box. Three moments during training are shown in Fig. 12: Fig. 12a shows the moment when the juice is picked up, Fig. 12b shows the moment when the juice is placed in the box, and Fig. 12c shows the moment when the task is about to be completed. Encouraging prompts and images appear in the interface, disappear after 6 s, and reappear the next time an item is successfully placed in a box. At the same time, encouraging speech and success sound effects are played to engage the participant. The air pressure in the rod chamber of the cylinder varies with the picked-up item, and the weight of an item is felt only while it is held. Because different mass attributes are set for each item in Unity, picking up different objects gives the subject a different sense of gravity. Combined with the transparency of the robot, the training gives the subject a more realistic sense of presence.

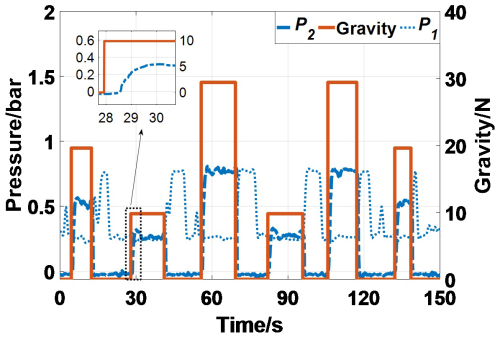

The air pressures of the linear cylinder measured during the experiment are shown in Fig. 13. The virtual items that are picked up are juice, vanilla, soda, watermelon, soda, and juice. The blue lines in the figure show the air pressures of the cylinder. The blue dashed line shows that the air pressure in the rod chamber of the cylinder follows the change in the object's gravity (solid red line), so the subject feels different vertical downward forces while dragging the cylinder. The partially enlarged view indicated by the arrow shows that the response time of the air pressure is about 1 s, which has little effect on the subject's training experience. The pressure in the rodless chamber is nonzero because it compensates for the initial load and static friction.
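A response time like the one read from the enlarged view can also be estimated from a sampled pressure record. The sketch below uses a rise-to-90 % criterion, which is an assumption rather than the paper's stated definition; the function name and the sample values in the test are illustrative.

```python
def rise_time(times_s, pressure, p_start, p_target, t_command, fraction=0.9):
    """Seconds from t_command until pressure first covers `fraction` of the step.

    Assumes a rising step (p_target > p_start); returns None if the
    threshold is never reached within the record.
    """
    threshold = p_start + fraction * (p_target - p_start)
    for t, p in zip(times_s, pressure):
        if t >= t_command and p >= threshold:
            return t - t_command
    return None
```

Here `t_command` would be the instant at which the object's gravity changes, and `pressure` the measured rod-chamber trace.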

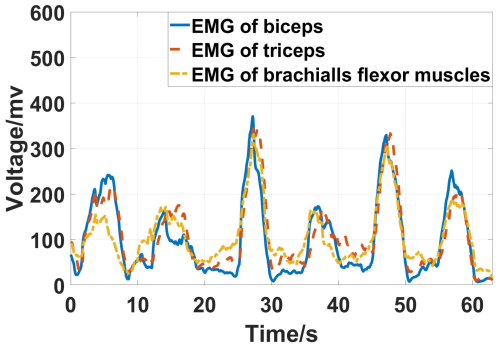

The collected EMG signals are shown in Fig. 14. The six peaks represent muscle strength when picking up the six items. It can be seen that changes in muscle strength of the upper limbs during training are consistent with the expectations of the designed strategy. When picking up an item with a mass of 1 kg, the amplitude of the EMG signal is about 200 mV. When the mass of the item is 2 kg, the amplitude of the EMG signal is between 200 and 300 mV. When picking up an item with a mass of 3 kg, the amplitude of the EMG signal is between 300 and 400 mV.

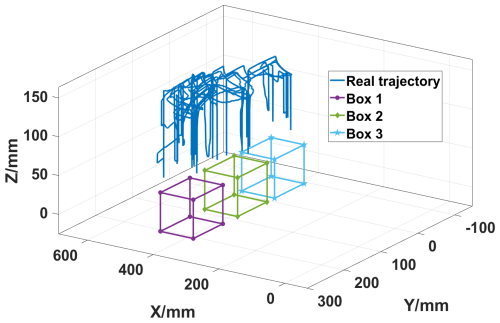

During the ADL training process, the trajectory of the robot end is shown in Fig. 15. The solid blue line in the figure represents the trajectory of the robot end, and the three cubes represent the three boxes in the virtual scene. It can be seen that the motion consisted of moving up and down in the vertical plane and translation in the horizontal plane. Figure 16 shows the entire training system and the experiment scene.

We used dumbbells weighing 1, 2, and 3 kg to test the changes in upper-limb EMG signals during the process of picking up and putting down. The variations in the EMG signal obtained during the sequential pick-up test are shown in Fig. 17, and the experimental conditions are depicted in Fig. 18. From Figs. 14 and 17, it can be observed that the upper-limb EMG signals elicited by the gravitational force rendered at the end of the robot for virtual objects and those elicited by picking up dumbbells of the same weight exhibit similar trends. Moreover, for different weights, the amplitudes of the corresponding EMG signals in the two experimental contexts are very close: close to 200 mV for a 1 kg weight, around 270 mV for a 2 kg weight, and close to 400 mV for a 3 kg weight. A comparative analysis of the experimental results indicates that the ADL training strategy employed in this study is feasible and that the real-time gravity feedback closely approximates real-life scenarios.

In this research, a display screen showing virtual training scenes was used in place of HMD devices, which helped reduce training costs and avoided discomfort to the user's head. The virtual human model appeared more realistic and appealing, and the limb bending and stretching produced by adding an inverse kinematics algorithm to the model were closer to the actual upper-limb motion posture. This approach was found to be more effective than the visual stimulation of displaying only one hand on the interface. While controlling virtual tasks, a head-turning action was also incorporated, which changed the perspective when picking up objects from different positions or placing them in boxes at various locations. This was similar to the perspective change experienced while wearing an HMD and was also more aligned with the actual state of daily activities, facilitating a more immersive training experience.

In addition to visual and auditory stimuli, adding force feedback further integrates physical and virtual systems. Basalp et al. (2019, 2020) designed a virtual rowing system for upper-limb training which can provide users with visual, tactile, and auditory feedback. At the same time, to meet the needs of different tasks, the system also allows users to adjust the density of virtual water and change the interaction force between the water and the oar. The system has a strong sense of presence and can satisfy users' more intensive training requirements, but its tactile feedback system requires accurate identification of the dynamic parameters of the entire system, which is complex. Keller et al. (2016) designed a 6-DOF exoskeleton robot for upper-limb rehabilitation for adolescents. This system integrates computer games with rehabilitation, enhancing participant engagement; however, the feedback is not yet realistic.

This article presents active training strategies for picking up and placing common objects encountered in daily life. Force feedback is provided based on the weight of the object, which creates multi-channel stimuli. The force feedback is generated by a vertically moving cylinder, which is simple and conforms to the directional characteristics of gravity. The experimental results indicate that the reaching motion is easy due to the transparency of the robot and that picking up different items significantly increases upper-limb muscle activation. Moreover, the comparison with the dumbbell pick-up experiment shows that the proposed training system matches real conditions closely in terms of vision and force perception.

This article presents a novel approach to rehabilitation training by combining virtual reality technology and rehabilitation robots. The goal is to design an active training strategy for ADL that provides multiple-information feedback and enhances upper-limb motor abilities. The training involves picking and placing activities that simulate daily activities and help patients exercise their muscles in the upper extremities. Virtual scenes offer engaging visual and auditory stimuli for participants, enhancing motivation and training effectiveness. The rehabilitation robot receives data from virtual scenes to provide force feedback, creating a sense of presence for the participants and improving neural stimulation channels. This approach is expected to help patients in the later stages of a stroke to improve their muscle strength and regain their upper-limb motor function.

The main contributions of this work are summarized as follows: (1) an ADL rehabilitation training strategy for the upper extremities is proposed. (2) The training provides participants with a sense of presence through vision, hearing, and force feedback.

The data that support the findings of this study are available from the corresponding author upon reasonable request.

LL and XL conceived the idea. LL and TL wrote and debugged the experimental code. LL drafted the paper; JL and LL discussed and edited the paper. LL finalized the paper. LL and XL provided financial and project support for the experiment.

The contact author has declared that none of the authors has any competing interests.

Publisher's note: Copernicus Publications remains neutral with regard to jurisdictional claims made in the text, published maps, institutional affiliations, or any other geographical representation in this paper. While Copernicus Publications makes every effort to include appropriate place names, the final responsibility lies with the authors.

The authors are grateful to the anonymous reviewers and the editor for their comments on and suggestions for improving our paper.

This document is the result of a research project funded by the Doctoral Scientific Research Foundation of Hubei University of Automotive Technology (grant no. 224035) and the Henan Provincial Science and Technology Research Project (grant no. 212102310890).

This research has been supported by the Doctoral Scientific Research Foundation of Hubei University of Automotive Technology (grant no. 224035) and the Henan Provincial Science and Technology Research Project (grant no. 212102310890).

This paper was edited by Zi Bin and reviewed by two anonymous referees.

Abdel Majeed, Y., Awadalla, S., and Patton, J. L.: Effects of robot viscous forces on arm movements in chronic stroke survivors: a randomized crossover study, J. NeuroEng. Rehabil., 17, 1–9, https://doi.org/10.1186/s12984-020-00782-3, 2020.

Archambault, P. S., Norouzi-Gheidari, N., Kairy, D., Levin, M. F., Milot, M., Monte-Silva, K., Sveistrup, H., and Trivino, M.: Upper extremity intervention for stroke combining virtual reality, robotics and electrical stimulation, 2019 International Conference on Virtual Rehabilitation (ICVR), 21–24 July 2019, Tel Aviv, Israel, 1–7, https://doi.org/10.1109/ICVR46560.2019.8994650, 2019.

Bardorfer, A., Munih, M., Zupan, A., and Primozic, A.: Upper limb motion analysis using haptic interface, IEEE-ASME T. Mech., 6, 253–260, https://doi.org/10.1109/3516.951363, 2001.

Basalp, E., Marchal-Crespo, L., Rauter, G., Riener, R., and Wolf, P.: Rowing simulator modulates water density to foster motor learning, Front. Robot. AI, 6, 74, https://doi.org/10.3389/frobt.2019.00074, 2019.

Basalp, E., Bachmann, P., Gerig, N., Rauter, G., and Wolf, P.: Configurable 3D rowing model renders realistic forces on a simulator for indoor training, Appl. Sci., 10, 734, https://doi.org/10.3390/app10030734, 2020.

Bortone, I., Leonardis, D., Mastronicola, N., Crecchi, A., Bonfiglio, L., Procopio, C., Solazzi, M., and Frisoli, A.: Wearable Haptics and Immersive Virtual Reality Rehabilitation Training in Children With Neuromotor Impairments, IEEE T. Neur. Sys. Reh., 26, 1469–1478, https://doi.org/10.1109/TNSRE.2018.2846814, 2018.

Broeren, J., Rydmark, M., and Sunnerhagen, K. S.: Virtual reality and haptics as a training device for movement rehabilitation after stroke: a single-case study, Arch. Phys. Med. Rehab., 85, 1247–1250, https://doi.org/10.1016/j.apmr.2003.09.020, 2004.

Caldas, O. I., Aviles, O. F., and Rodriguez-Guerrero, C.: Effects of presence and challenge variations on emotional engagement in immersive virtual environments, IEEE T. Neur. Sys. Reh., 28, 1109–1116, https://doi.org/10.1109/TNSRE.2020.2985308, 2020.

Chatterjee, K., Buchanan, A., Cottrell, K., Hughes, S., Day, T. W., and John, N. W.: Immersive virtual reality for the cognitive rehabilitation of stroke survivors, IEEE T. Neur. Sys. Reh., 30, 719–728, https://doi.org/10.1109/TNSRE.2022.3158731, 2022.

Dehem, S., Gilliaux, M., Stoquart, G., Detrembleur, C., Jacquemin, G., Palumbo, S., Frederick, A., and Lejeune, T.: Effectiveness of upper-limb robotic-assisted therapy in the early rehabilitation phase after stroke: A single-blind, randomised, controlled trial, Annals of Physical and Rehabilitation Medicine, 62, 313–320, https://doi.org/10.1016/j.rehab.2019.04.002, 2019.

Di Diodato, L. M., Mraz, R., Baker, S. N., and Graham, S. J.: A haptic force feedback device for virtual reality-fMRI experiments, IEEE T. Neur. Sys. Reh., 15, 570–576, https://doi.org/10.1109/TNSRE.2007.906962, 2007.

Dobek, J. C., White, K. N., and Gunter, K. B.: The effect of a novel ADL-based training program on performance of activities of daily living and physical fitness, J. Aging Phys. Activ., 15, 13–25, https://doi.org/10.1123/japa.15.1.13, 2007.

Feys, H. M., De Weerdt, W. J., Selz, B. E., Cox Steck, G. A., Spichiger, R., Vereeck, L. E., Putman, K. D., and Van Hoydonck, G. A. J. S.: Effect of a therapeutic intervention for the hemiplegic upper limb in the acute phase after stroke: a single-blind, randomized, controlled multicenter trial, Stroke, 29, 785–792, https://doi.org/10.1161/01.STR.29.4.785, 1998.

Gerber, S. M., Müri, R. M., Mosimann, U. P., Nef, T., and Urwyler, P.: Virtual reality for activities of daily living training in neurorehabilitation: a usability and feasibility study in healthy participants, 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), 18–21 July 2018, Honolulu, HI, USA, 1–4, https://doi.org/10.1109/EMBC.2018.8513003, 2018.

Goršič, M., Cikajlo, I., Goljar, N., and Novak, D.: A Multisession Evaluation of a Collaborative Virtual Environment for Arm Rehabilitation, PRESENCE: Virtual and Augmented Reality, 27, 274–286, https://doi.org/10.1162/pres_a_00331, 2020.

Huang, H.-C., Chung, K.-C., Lai, D.-C., and Sung, S.-F.: The impact of timing and dose of rehabilitation delivery on functional recovery of stroke patients, J. Chin. Med. Assoc., 72, 257–264, https://doi.org/10.1016/S1726-4901(09)70066-8, 2009.

Ibrahim, H., Hassan, H., and Shalaby, R.: A review of upper limb robot assisted therapy techniques and virtual reality applications, IAES International Journal of Artificial Intelligence (IJ-AI), 11, 613, https://doi.org/10.11591/ijai.v11.i2.pp613-623, 2022.

Keller, U., van Hedel, H. J., Klamroth-Marganska, V., and Riener, R.: ChARMin: The first actuated exoskeleton robot for pediatric arm rehabilitation, IEEE-ASME T. Mech., 21, 2201–2213, https://doi.org/10.1109/TMECH.2016.2559799, 2016.

Kim, B., Ahn, K.-H., Nam, S., and Hyun, D. J.: Upper extremity exoskeleton system to generate customized therapy motions for stroke survivors, Robot. Auton. Syst., 154, 104128, https://doi.org/10.1016/j.robot.2022.104128, 2022.

Li, L., Han, J., Li, X., Guo, B., Wang, X., and Du, G.: A novel end-effector upper limb rehabilitation robot: Kinematics modeling based on dual quaternion and low-speed spiral motion tracking control, Int. J. Adv. Robot. Syst., 19, https://doi.org/10.1177/17298806221118855, 2022.

Malukhin, K. and Ehmann, K.: Mathematical modeling and virtual reality simulation of surgical tool interactions with soft tissue: A review and prospective, Journal of Engineering and Science in Medical Diagnostics and Therapy, 1, 020802, https://doi.org/10.1115/1.4039417, 2018.

Marchal-Crespo, L., Rappo, N., and Riener, R.: The effectiveness of robotic training depends on motor task characteristics, Exp. Brain Res., 235, 3799–3816, https://doi.org/10.1007/s00221-017-5099-9, 2017.

Mostajeran, M., Alizadeh, S., Rostami, H. R., Ghaffari, A., and Adibi, I.: Feasibility and efficacy of an early sensory-motor rehabilitation program on hand function in patients with stroke: a pilot, single-subject experimental design, Neurol. Sci., 45, 2737–2746, https://doi.org/10.1007/s10072-023-07288-5, 2024.

Nagappan, P. G., Chen, H., and Wang, D.-Y.: Neuroregeneration and plasticity: a review of the physiological mechanisms for achieving functional recovery postinjury, Military Medical Research, 7, 1–16, https://doi.org/10.1186/s40779-020-00259-3, 2020.

Nath, D., Singh, N., Saini, M., Banduni, O., Kumar, N., Srivastava, M. P., and Mehndiratta, A.: Clinical potential and neuroplastic effect of targeted virtual reality based intervention for distal upper limb in post-stroke rehabilitation: a pilot observational study, Disabil. Rehabil., 46, 2640–2649, https://doi.org/10.1080/09638288.2023.2228690, 2024.

Norouzi-Gheidari, N., Hernandez, A., Archambault, P. S., Higgins, J., Poissant, L., and Kairy, D.: Feasibility, Safety and Efficacy of a Virtual Reality Exergame System to Supplement Upper Extremity Rehabilitation Post-Stroke: A Pilot Randomized Clinical Trial and Proof of Principle, Int. J. Env. Res. Pub. He., 17, 113, https://doi.org/10.3390/ijerph17010113, 2019.

Oztop, E., Kawato, M., and Arbib, M. A.: Mirror neurons: functions, mechanisms and models, Neurosci. Lett., 540, 43–55, https://doi.org/10.1016/j.neulet.2012.10.005, 2013.

Rojo, A., Santos-Paz, J. Á., Sánchez-Picot, Á., Raya, R., and García-Carmona, R.: FarmDay: A Gamified Virtual Reality Neurorehabilitation Application for Upper Limb Based on Activities of Daily Living, Appl. Sci., 12, 7068, https://doi.org/10.3390/app12147068, 2022.

Shin, J. H., Ryu, H., and Jang, S. H.: A task-specific interactive game-based virtual reality rehabilitation system for patients with stroke: a usability test and two clinical experiments, J. NeuroEng. Rehabil., 11, 1–10, https://doi.org/10.1186/1743-0003-11-32, 2014.

Sigrist, R., Rauter, G., Riener, R., and Wolf, P.: Augmented visual, auditory, haptic, and multimodal feedback in motor learning: A review, Psychon. B. Rev., 20, 21–53, https://doi.org/10.3758/s13423-012-0333-8, 2013.

Soundararajan, N., Karne, R. K., Wijesinha, A. L., Ordouie, N., and Rawal, B. S.: A Novel Client/Server Protocol for Web-based Communication over UDP on a Bare Machine, 2020 IEEE Student Conference on Research and Development (SCOReD), Batu Pahat, Malaysia, 27–29 September 2020, 122–127, https://doi.org/10.1109/SCOReD50371.2020.9251017, 2020.

Sveistrup, H.: Motor rehabilitation using virtual reality, J. NeuroEng. Rehabil., 1, 1–8, https://doi.org/10.1186/1743-0003-1-10, 2004.

Tao, G., Garrett, B., Taverner, T., Cordingley, E., and Sun, C.: Immersive virtual reality health games: a narrative review of game design, J. NeuroEng. Rehabil., 18, 1–21, https://doi.org/10.1186/s12984-020-00801-3, 2021.

Triandafilou, K. M., Tsoupikova, D., Barry, A. J., Thielbar, K. N., Stoykov, N., and Kamper, D. G.: Development of a 3D, networked multi-user virtual reality environment for home therapy after stroke, J. NeuroEng. Rehabil., 15, 1–13, https://doi.org/10.1186/s12984-018-0429-0, 2018.

Turolla, A., Dam, M., Ventura, L., Tonin, P., Agostini, M., Zucconi, C., Kiper, P., Cagnin, A., and Piron, L.: Virtual reality for the rehabilitation of the upper limb motor function after stroke: a prospective controlled trial, J. NeuroEng. Rehabil., 10, 85, https://doi.org/10.1186/1743-0003-10-85, 2013.

Wright, Z. A., Lazzaro, E., Thielbar, K. O., Patton, J. L., and Huang, F. C.: Robot training with vector fields based on stroke survivors' individual movement statistics, IEEE T. Neur. Sys. Reh., 26, 307–323, https://doi.org/10.1109/TNSRE.2017.2763458, 2017.