the Creative Commons Attribution 4.0 License.

Development of a flexible endoscopic robot with autonomous tracking control ability using machine vision and deep learning

Sen Qian

Jianxi Zhang

Zongkun Pei

Xiantao Sun

Zhe Wu

A flexible endoscopic robot is designed to solve the problem that assistant doctors find it difficult to maintain a stable visual field in traditional endoscopic surgery. Based on geometric derivation, a motion control method for the robot system under the remote center of motion (RCM) constraint is established, and a set of circular trajectories is planned for it. The RCM error of the robot during operation and the actual trajectory of the robot end in three-dimensional space are obtained with a motion capture system. The robot end is controlled by a heterogeneous primary–secondary teleoperation control algorithm based on position increments. Finally, the RTMDet deep learning object detection algorithm is selected through comparative experiments to identify and locate surgical instruments, and autonomous tracking control is achieved based on visual guidance. During autonomous tracking, the RCM error was less than 1 mm, which meets actual surgical requirements.

Compared with traditional open surgery, endoscopic minimally invasive surgical robots (EMISRs) offer many advantages, such as less surgical trauma, less blood loss and faster recovery (Ejaz et al., 2014; Zenoni et al., 2013). Research on EMISRs not only has high academic value but also has broad market prospects and great economic benefits (Dhumane et al., 2011; Gomes, 2011). Many well-known universities, enterprises and other institutions have begun in-depth research on endoscopic surgical robots, covering mechanical design, kinematic modeling, drive compensation, motion control, force sensing and automatic tracking.

With improvements in accuracy and reliability, endoscopic surgical robotic systems have achieved significant research results, and some have been put into clinical use, providing great help to doctors. For example, building on the AESOP surgical system, Computer Motion developed the ZEUS surgical system, which was used in the famous transatlantic remote cholecystectomy known as the “Lindbergh operation” (Guthart and Salisbury, 2000; Sung and Gill, 2001). The da Vinci surgical system, developed by Intuitive Surgical in the United States, has been approved by the FDA for use in hospitals (Brahmbhatt et al., 2014; Chen et al., 2018; Freschi et al., 2013). Tianjin University and Tianjin Medical University jointly developed the “MicroHand S” surgical robot system (Li et al., 2005; Liang et al., 2018; Su et al., 2018), which adopts a primary–secondary heterogeneous spatial mapping model and provides virtual force feedback.

Compared with traditional rigid joints, flexible continuum mechanisms offer light weight, high integration and high motion flexibility. Olympus Medical (Japan) developed a flexible endoscope whose bending is controlled by a set of wires (Zhang et al., 2011). Building on this, several flexible instruments and endoscopes have been developed (Berkelman and Ma, 2009; Ding et al., 2012; Dai et al., 2019; Wang et al., 2023; Kanno et al., 2014). Tewari et al. (2013) proposed a flexible laparoscopic robot that can capture images flexibly.

Flexible endoscopic robots are subject to the remote center of motion (RCM) constraint during motion. Dedicated surgical robots generally realize the RCM constraint with special mechanical structures, such as parallelogram or spherical mechanisms (Tewari et al., 2013). Because such robots are expensive, this paper uses a general-purpose robotic arm instead of a dedicated surgical robot and implements the RCM constraint algorithmically in software (Sandoval et al., 2016; Sadeghian et al., 2019; Aghakhani et al., 2013).

The main contribution of this research is to realize motion planning and primary–secondary teleoperation control of a flexible endoscopic robot under RCM constraints, based on the robot's structure, and to select an appropriate deep learning algorithm for autonomous tracking. First, the inverse kinematics of the robotic arm under the RCM constraint is derived, and trajectory planning of the flexible endoscopic robot is verified. Second, taking preoperative positioning and the teleoperation control strategy into account, a primary–secondary teleoperation control system under the RCM constraint is established. Third, machine vision and deep learning are applied to the flexible endoscopic robot, and an autonomous tracking control strategy based on visual guidance is proposed and verified. The paper is organized as follows: Sect. 1 is the introduction, Sect. 2 describes the design of the flexible endoscopic robot, Sect. 3 presents motion planning and teleoperation experiments, Sect. 4 presents autonomous tracking control experiments, and Sect. 5 concludes the paper.

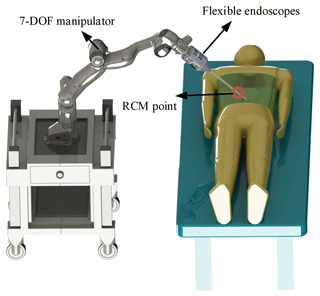

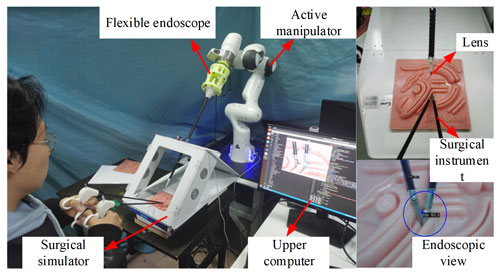

In traditional minimally invasive endoscopic surgery, the movement of the endoscope is restricted by the telecentric point, making it difficult to adjust the position of the distal lens inside the abdominal cavity. To improve the endoscope's movement flexibility and visual-field stability and to meet surgeons' requirements for the surgical field of view, this paper adopts the structural design of Shiyang Bao (2023) and realizes the RCM constraint with a general-purpose robotic arm. The overall structure is shown in Fig. 1.

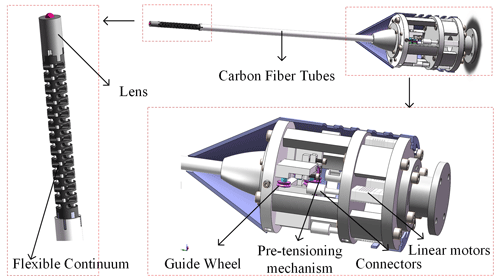

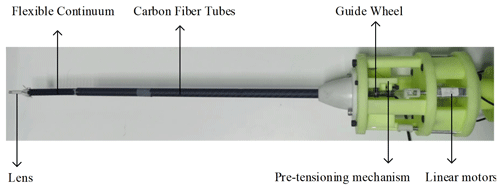

The overall structure of the 2-degree-of-freedom (DOF) flexible endoscope actuator is shown in Fig. 2. It consists of a flexible continuum, a lens, a carbon fiber tube, a preload mechanism, guide wheels, micro linear motors and a fixing device. Four micro linear motors are evenly arranged. One end of each flexible cable is connected to a micro linear motor; the other end passes over a guide wheel, through the carbon fiber tube and through the flexible continuum, where it is fixed with a threaded aluminum sleeve. The preload mechanism consists of a preload slider and a preload screw: rotating the screw moves the slider, which moves the guide wheel to adjust the cable tension and ensure accurate motion of the flexible continuum. To simplify assembly and replacement of parts, the linear motors and the preload mechanism are installed in separate layers. The actual prototype is shown in Fig. 3.

Take laparoscopic surgery as an example. The surgeon first establishes an artificial pneumoperitoneum to create sufficient surgical space and then opens two to three access ports in the patient's abdominal wall. Positioned by the robotic arm, the end effector enters the appropriate position in the abdominal cavity through these ports. The point where the end effector passes through the abdominal wall is called the telecentric point. It constrains the two translational degrees of freedom perpendicular to the shaft, so that only insertion along the shaft and rotations about the point remain. During the movement of the robotic arm, the end effector must always pass through this fixed point; otherwise it will injure the abdominal wall and endanger the patient's life.

At present, most surgical robots adopt dedicated mechanical structures. The da Vinci surgical robot, for example, uses a special arm configuration that satisfies the telecentric point constraint mechanically, so little software is needed to enforce it. Because da Vinci and other dedicated medical robots are very expensive, this paper uses a common general-purpose robotic arm instead, which requires solving the inverse kinematics of the arm under the RCM constraint.

3.1 Kinematic analysis of the robotic arm

3.1.1 Forward kinematic analysis of the robotic arm

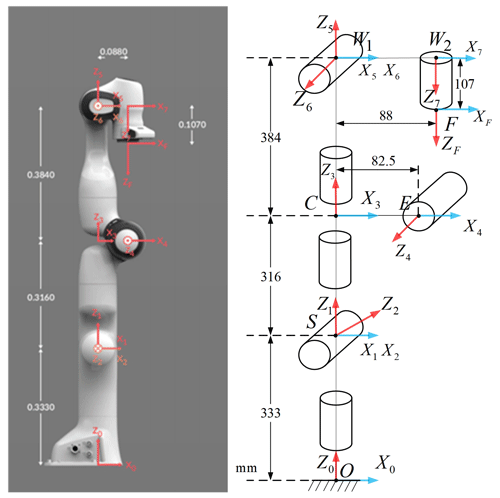

In this paper, the kinematic model of the Franka Emika robotic arm is built with the modified Denavit–Hartenberg (D-H) method, as shown in Fig. 4.

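Let T denote the transformation matrix between the coordinate systems of adjacent links, where i−1Ti is the transformation of coordinate system {i} of the robot arm relative to coordinate system {i−1}. In the modified D-H convention, the general expression of i−1Ti is

$$
{}^{i-1}T_i=\mathrm{Rot}_x(\alpha_{i-1})\,\mathrm{Trans}_x(a_{i-1})\,\mathrm{Rot}_z(\theta_i)\,\mathrm{Trans}_z(d_i)=
\begin{bmatrix}
\cos\theta_i & -\sin\theta_i & 0 & a_{i-1}\\
\sin\theta_i\cos\alpha_{i-1} & \cos\theta_i\cos\alpha_{i-1} & -\sin\alpha_{i-1} & -d_i\sin\alpha_{i-1}\\
\sin\theta_i\sin\alpha_{i-1} & \cos\theta_i\sin\alpha_{i-1} & \cos\alpha_{i-1} & d_i\cos\alpha_{i-1}\\
0 & 0 & 0 & 1
\end{bmatrix}
$$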
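The modified D-H link transform can be coded directly. The sketch below chains it for a Franka Emika Panda-type arm. The parameter table is an assumption taken from the manufacturer's published values, not from this paper. It was chosen because its offsets a3, a4 (elbow) and a6 (wrist) match the description in Sect. 3.1.2. Verify the values against your own robot before use.

```python
import numpy as np


def mdh_transform(alpha_prev, a_prev, d, theta):
    """Modified (Craig) D-H link transform: RotX(alpha_{i-1}) TransX(a_{i-1}) RotZ(theta_i) TransZ(d_i)."""
    ca, sa = np.cos(alpha_prev), np.sin(alpha_prev)
    ct, st = np.cos(theta), np.sin(theta)
    return np.array([
        [ct, -st, 0.0, a_prev],
        [st * ca, ct * ca, -sa, -sa * d],
        [st * sa, ct * sa, ca, ca * d],
        [0.0, 0.0, 0.0, 1.0],
    ])


# Assumed modified D-H rows (alpha_{i-1} [rad], a_{i-1} [m], d_i [m]) for a Franka Emika
# Panda-type arm, from the manufacturer's published table; verify against your robot.
PANDA_MDH = [
    (0.0, 0.0, 0.333),
    (-np.pi / 2, 0.0, 0.0),
    (np.pi / 2, 0.0, 0.316),
    (np.pi / 2, 0.0825, 0.0),      # a3: elbow offset
    (-np.pi / 2, -0.0825, 0.384),  # a4: elbow offset
    (np.pi / 2, 0.0, 0.0),
    (np.pi / 2, 0.088, 0.0),       # a6: wrist offset
]
FLANGE = (0.0, 0.0, 0.107)         # fixed flange frame after joint 7


def forward_kinematics(q):
    """4x4 pose of the flange in the base frame for seven joint angles q [rad]."""
    T = np.eye(4)
    for (alpha, a, d), theta in zip(PANDA_MDH, q):
        T = T @ mdh_transform(alpha, a, d, theta)
    return T @ mdh_transform(*FLANGE, 0.0)


T0 = forward_kinematics(np.zeros(7))
print(T0[:3, 3])  # flange position at the zero configuration, about (0.088, 0.0, 0.926) m
```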

3.1.2 Inverse kinematic analysis of robotic arm under RCM constraints

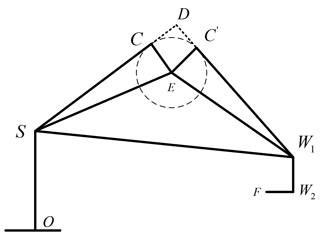

The kinematic model shows that a3 and a4 are elbow offsets and a6 is a wrist offset. The arm therefore does not satisfy the Pieper criterion of robot kinematics, which requires three consecutive joint axes to intersect at a common point. In this paper, the fixed-arm-shape-angle parameter method is adopted to solve the inverse kinematics (Wang et al., 2022). When the end-effector pose is known, each joint of the robotic arm takes a corresponding angle, as shown in Fig. 5.

In ΔDSW1, the following equation is obtained from the law of cosines:
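The law-of-cosines step can be sketched as a small helper. The triangle ΔDSW1 is defined in Fig. 5, and its side lengths are not reproduced in this text. The function below is therefore generic: sides a and b, and the opposite side c, are assumed inputs. It also rejects side lengths that violate the triangle inequality, which here would mean the wrist point W1 is out of reach.

```python
import math


def angle_from_sides(a, b, c, tol=1e-12):
    """Interior angle (rad) opposite side c in a triangle with side lengths a, b, c.

    Uses the law of cosines, c^2 = a^2 + b^2 - 2ab cos(gamma).
    """
    if c > a + b + tol or c < abs(a - b) - tol:
        raise ValueError("side lengths violate the triangle inequality (point out of reach)")
    cos_gamma = (a * a + b * b - c * c) / (2.0 * a * b)
    # Clamp so that round-off on nearly degenerate triangles cannot push acos out of its domain.
    return math.acos(max(-1.0, min(1.0, cos_gamma)))
```

For example, with an effective upper-arm length, an effective forearm length and the shoulder–wrist distance as inputs, the function returns the interior angle at the elbow.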

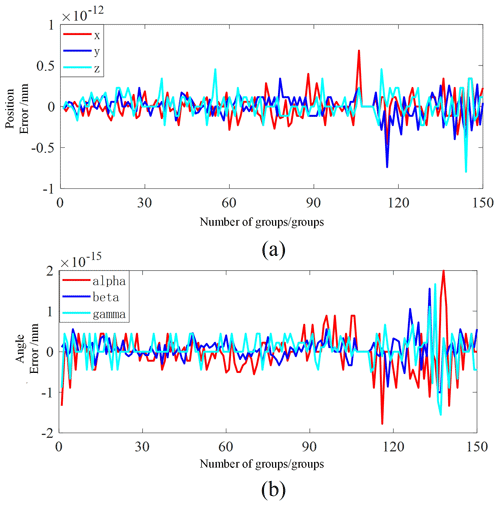

Here θ7 is given in advance. Once the position of W1 is obtained, the inverse kinematics of the manipulator can be solved by the fixed-arm-shape-angle parameter method. In this paper, the plane formed by points S, E and W1 is called the arm plane. The plane formed by points O, S and W1 when θ3=0 is defined as the reference plane. The arm angle φ is the angle between the arm plane and the reference plane, as shown in Fig. 6.

The actual motion of the robot arm can be seen as first reaching the target position in the reference plane and then rotating around the SW1 axis by a certain angle.
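The swivel motion about the SW1 axis can be illustrated with Rodrigues' formula:

R_φ = I + sin φ [u]× + (1 − cos φ)[u]×²

Here u is the unit vector from S to W1. This is a minimal sketch of the rotation only. It assumes that the elbow point E in the reference plane is already known. How the paper composes R_φ with its reference-plane rotation matrices cannot be recovered from this text.

```python
import numpy as np


def axis_angle_matrix(axis, angle):
    """Rodrigues' formula: rotation of `angle` rad about `axis` (normalized internally)."""
    u = np.asarray(axis, dtype=float)
    u = u / np.linalg.norm(u)
    K = np.array([[0.0, -u[2], u[1]],
                  [u[2], 0.0, -u[0]],
                  [-u[1], u[0], 0.0]])  # skew-symmetric matrix so that K @ v == cross(u, v)
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)


def swivel_elbow(S, W, E_ref, phi):
    """Rotate the reference-plane elbow point E_ref about the S->W axis by the arm angle phi."""
    S, W, E_ref = (np.asarray(v, dtype=float) for v in (S, W, E_ref))
    return S + axis_angle_matrix(W - S, phi) @ (E_ref - S)
```

Because the rotation is about the SW1 axis, the shoulder–elbow and elbow–wrist distances are preserved for every φ. This is why the arm angle is a free parameter of the redundant arm.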

where , and denote the joint angle in the reference plane, while and denote the rotation matrix in the reference plane.

By solving the equation, one obtains . Since the Y coordinate of the vector is 0, the following equation can be obtained:

At this point, , and are known, and substituting them into Eq. (10) yields .

Substituting Eq. (19) into Eq. (25) gives

The arm shape angle φ corresponds to the joint angle θ7: once θ7 is given, φ is also determined.

Applying trigonometric identities to the equations yields

φ is obtained from Eq. (40) and then substituted into the expressions for all joint angles.

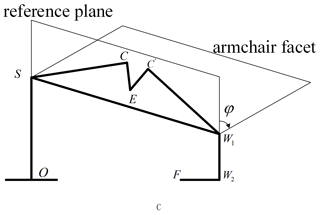

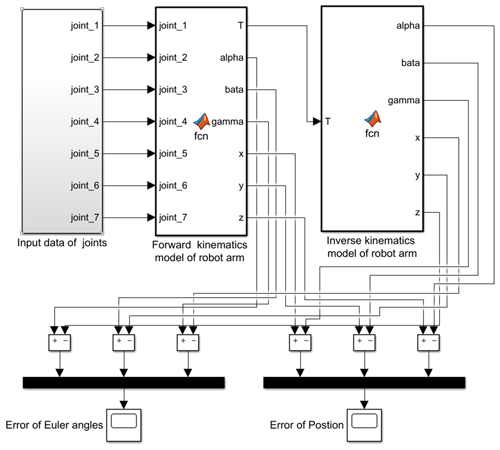

3.1.3 Kinematic verification of the robotic arm

The robotic arm in this paper is a serial rigid-link manipulator, so its forward kinematics is relatively simple to derive and verify. Specific joint angles are input, and the computed pose is compared with the actual pose of the robot arm. This section therefore focuses on verifying the correctness of the inverse kinematics.

The inverse kinematics of the manipulator is verified as follows. First, forward and inverse kinematics function modules of the manipulator are built in MATLAB/Simulink. Second, several sets of joint angles within the joint limits are chosen. The corresponding end pose matrices are computed by forward kinematics and expressed as a position and Euler angles. Third, the inverse kinematics is applied to these pose matrices, and the resulting joint angles are passed through forward kinematics again to obtain a second position and set of Euler angles. Finally, the two sets of positions and Euler angles are compared. If they agree, the inverse kinematics is verified.
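The FK → IK → FK round trip described above can be sketched in a few lines. The paper's 7-DOF solver is not reproduced here, so the example substitutes a planar two-link arm with a closed-form inverse solution. Its link lengths are hypothetical, and it compares positions only. The structure of the check is the same.

```python
import numpy as np

L1, L2 = 0.4, 0.3  # hypothetical link lengths [m] of a planar 2R arm


def fk(q):
    """Planar 2R forward kinematics: joint angles -> end position (x, y)."""
    return np.array([L1 * np.cos(q[0]) + L2 * np.cos(q[0] + q[1]),
                     L1 * np.sin(q[0]) + L2 * np.sin(q[0] + q[1])])


def ik(p, elbow_sign=1.0):
    """Closed-form planar 2R inverse kinematics; elbow_sign selects one of the two branches."""
    x, y = p
    c2 = (x * x + y * y - L1 * L1 - L2 * L2) / (2.0 * L1 * L2)
    q2 = elbow_sign * np.arccos(np.clip(c2, -1.0, 1.0))
    q1 = np.arctan2(y, x) - np.arctan2(L2 * np.sin(q2), L1 + L2 * np.cos(q2))
    return np.array([q1, q2])


def round_trip_error(q_samples):
    """FK -> IK -> FK check: largest distance between the two computed end positions."""
    worst = 0.0
    for q in q_samples:
        p_ref = fk(q)
        p_back = fk(ik(p_ref))
        worst = max(worst, float(np.linalg.norm(p_back - p_ref)))
    return worst


rng = np.random.default_rng(0)
samples = rng.uniform([-np.pi, 0.1], [np.pi, np.pi - 0.1], size=(200, 2))
print(round_trip_error(samples))  # should be at floating-point round-off level
```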

The inverse kinematics simulation model of the manipulator is shown in Fig. 7. The position error between the two computations is shown in Fig. 8a, and the Euler angle error is shown in Fig. 8b. The errors are of the order of MATLAB's floating-point round-off, so the inverse kinematics error can be regarded as zero. This verifies the correctness of the inverse kinematics.

3.2 Inverse kinematic analysis of the robot arm under RCM constraints

The end of the robotic arm, the telecentric fixed point and the target point are collinear, so the following relation holds:

In Eq. (41), pa is the coordinate of the end of the robotic arm, pt is the coordinate of the target point, p0 is the coordinate of the telecentric fixed point and l is the length from the end of the robotic arm to the tip of the endoscope.
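Eq. (41) did not survive extraction. The sketch below therefore assumes the natural form of the collinearity condition: the arm end lies on the ray from the target through the RCM point, at distance l from the target.

p_a = p_t + l (p_0 − p_t)/‖p_0 − p_t‖

The function name and the reachability check are illustrative additions.

```python
import numpy as np


def arm_end_from_target(p_t, p_0, l):
    """Arm-end position p_a on the ray from the target p_t through the RCM point p_0.

    Assumed form of Eq. (41): p_a = p_t + l * (p_0 - p_t) / ||p_0 - p_t||.
    """
    p_t = np.asarray(p_t, dtype=float)
    p_0 = np.asarray(p_0, dtype=float)
    direction = p_0 - p_t
    dist = np.linalg.norm(direction)
    # The RCM point must lie between the target and the arm end, i.e. 0 < ||p_0 - p_t|| < l.
    if dist < 1e-12 or dist >= l:
        raise ValueError("target must be closer to the RCM point than the endoscope length l")
    return p_t + l * direction / dist
```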

The endoscope is coaxial with the Z axis of the end coordinate system of the robot arm. The orientation of the X and Y axes of that coordinate system is therefore irrelevant, except that it affects the angle of the seventh joint. After the joint angles of the robot arm are obtained, θ7 is used directly as the angle of the seventh joint. The rotation matrix R of the arm end can be obtained from the equivalent rotation axis. The Z axis of the end coordinate system can be written as

The Z axis of the robot arm base coordinate system can be written as

Therefore, the equivalent rotation axis is

From the properties of the cross product, the equivalent rotation axis is perpendicular to the base Z axis, so kz=0 in Eq. (45). The equivalent rotation angle is

According to the conversion relationship between the equivalent rotation axis and the rotation matrix, it can be obtained that

With R obtained from the above equation and the end position of the robot arm known, the joint angles of the robot arm are solved by inverse kinematics.
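The orientation step can be sketched as follows. It assumes that the end Z axis points from the arm end toward the target and that the base Z axis is z0 = (0, 0, 1). The equivalent axis k = z0 × z_e then has a zero Z component, the angle is arccos(z0 · z_e), and Rodrigues' formula gives R. The handling of the parallel and antiparallel cases is an added assumption. θ7 is set separately, as the text describes.

```python
import numpy as np


def end_rotation(p_a, p_t):
    """Rotation R whose Z column points from the arm end p_a toward the target p_t.

    Built from the equivalent axis k = z0 x z_e (so k_z = 0) and angle acos(z0 . z_e),
    where z0 = (0, 0, 1) is the base Z axis.
    """
    z_e = np.asarray(p_t, float) - np.asarray(p_a, float)
    z_e /= np.linalg.norm(z_e)
    z0 = np.array([0.0, 0.0, 1.0])
    k = np.cross(z0, z_e)            # third component is always 0
    s = np.linalg.norm(k)            # sine of the rotation angle
    c = float(np.dot(z0, z_e))       # cosine of the rotation angle
    if s < 1e-12:
        # z_e is (anti)parallel to z0: identity, or a half-turn about the base X axis.
        return np.eye(3) if c > 0 else np.diag([1.0, -1.0, -1.0])
    k /= s
    K = np.array([[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]])
    return np.eye(3) + s * K + (1.0 - c) * (K @ K)
```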

3.3 Flexible endoscopic robot trajectory planning experiment

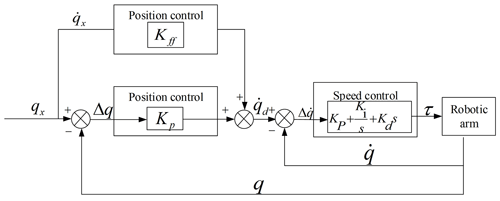

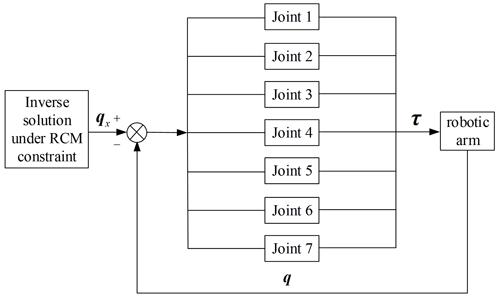

The Franka robotic arm has seven joints. Once the target position of each joint is known, the arm is controlled in closed loop using joint position and joint velocity feedback. Taking a single joint as an example, the overall design framework of the single-joint motion controller, including position control, velocity control and kinematics, is shown in Fig. 9.

The single-joint motion controller consists of three parts: velocity feedforward control, position PID control and velocity PID control. The calculation formula of velocity feedforward control and position PID control is as follows:

In Eq. (48), qx is the desired joint position, q is the actual joint position, Kp is the proportional coefficient, Kff is the velocity feedforward compensation coefficient, and the output of Eq. (48) is the calculated desired joint velocity.

In Eq. (49), Kp, Ki and Kd are the proportional, integral and derivative coefficients, and τ is the joint torque. τ is sent as the control signal to the robotic arm controller to drive the joint. Closed-loop PID control improves the response speed of the robotic arm under the RCM constraint, making its motion more continuous and smoother and reducing the error at the RCM constraint point.
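A single-joint cascade controller of this type can be sketched as below. Eqs. (48) and (49) are not reproduced in this text, so the forms used here are assumptions. The outer loop computes a desired velocity Kp(qx − q) + Kff·q̇x, and the inner loop applies PID to the velocity error to produce τ. The class and parameter names are hypothetical, and the interface to the real Franka controller is not shown.

```python
from dataclasses import dataclass


@dataclass
class CascadeJointController:
    """Single-joint cascade: velocity feedforward + position P loop -> velocity PID loop -> torque."""
    kp_pos: float        # proportional gain of the position loop (Kp in Eq. 48)
    k_ff: float          # velocity feedforward coefficient (Kff)
    kp_vel: float        # velocity-loop gains (Kp, Ki, Kd in Eq. 49)
    ki_vel: float
    kd_vel: float
    integral: float = 0.0
    prev_error: float = 0.0

    def step(self, q_des, qd_des, q, qd, dt):
        """One control cycle; returns (commanded joint velocity, joint torque)."""
        # Outer loop: desired velocity from the position error plus feedforward of the reference velocity.
        qd_cmd = self.kp_pos * (q_des - q) + self.k_ff * qd_des
        # Inner loop: PID on the velocity error yields the torque sent to the joint controller.
        error = qd_cmd - qd
        self.integral += error * dt
        derivative = (error - self.prev_error) / dt
        self.prev_error = error
        tau = self.kp_vel * error + self.ki_vel * self.integral + self.kd_vel * derivative
        return qd_cmd, tau


ctrl = CascadeJointController(kp_pos=2.0, k_ff=1.0, kp_vel=10.0, ki_vel=0.0, kd_vel=0.0)
print(ctrl.step(q_des=1.0, qd_des=0.5, q=0.0, qd=0.0, dt=0.01))  # (2.5, 25.0)
```

On the first call, the derivative term sees a jump from zero error (derivative kick). Practical implementations often differentiate the measured velocity instead, or low-pass filter the derivative.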

3.3.1 Verification of flexible endoscopic robot trajectory planning under RCM constraints

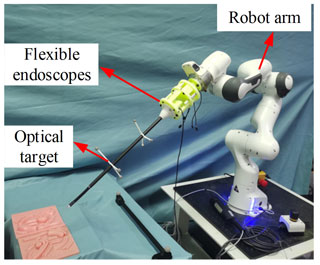

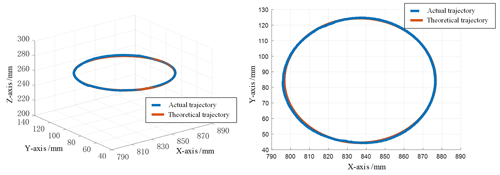

To verify the kinematic analysis under RCM constraints, the experimental platform shown in Fig. 10 was first built. The RCM point position was recorded. The position of the straight rod of the flexible endoscope was tracked in real time by optical markers mounted on that rod. The RCM error is then calculated as the distance from the RCM point to the straight line along the carbon fiber rod of the flexible endoscope. This error is used to evaluate the motion performance of the flexible endoscopic robot under the RCM constraint. A set of circular trajectories with a radius of 40 mm is planned for the end of the flexible endoscopic robot. Compared with linear or matrix trajectories, circular trajectories are more complex and require larger arm motions under the RCM constraint, which places higher demands on the control performance of the robotic arm. The theoretical and actual trajectories of the flexible endoscopic robot are shown in Fig. 11.
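The RCM error metric is a point-to-line distance, and the planned circle can be generated and checked against it in a short sketch. The geometry below is hypothetical: the RCM point location, a circle plane 0.15 m below it and an endoscope length of 0.35 m. The arm end is placed with the assumed collinear form of Eq. (41). With ideal kinematics the computed error is only round-off. In the experiment, the two points defining the line come from the markers on the rod.

```python
import numpy as np


def point_to_line_distance(p, a, b):
    """Distance from point p to the infinite line through a and b (e.g., two markers on the rod)."""
    p, a, b = (np.asarray(v, dtype=float) for v in (p, a, b))
    d = b - a
    return np.linalg.norm(np.cross(p - a, d)) / np.linalg.norm(d)


def circle_waypoints(center, radius, n):
    """n points on a horizontal circle (parallel to the base X-Y plane) around `center`."""
    t = np.linspace(0.0, 2.0 * np.pi, n, endpoint=False)
    c = np.asarray(center, dtype=float)
    return np.stack([c[0] + radius * np.cos(t), c[1] + radius * np.sin(t), np.full(n, c[2])], axis=1)


# Hypothetical geometry [m]: RCM point, circle centre 0.15 m below it, endoscope length 0.35 m.
p_rcm = np.array([0.5, 0.0, 0.3])
targets = circle_waypoints([0.5, 0.0, 0.15], 0.040, 36)
l = 0.35
errors = []
for p_t in targets:
    u = (p_rcm - p_t) / np.linalg.norm(p_rcm - p_t)
    p_a = p_t + l * u                      # arm end on the target->RCM ray (assumed Eq. (41) form)
    errors.append(point_to_line_distance(p_rcm, p_a, p_t))
print(max(errors))  # ideal kinematics: round-off only
```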

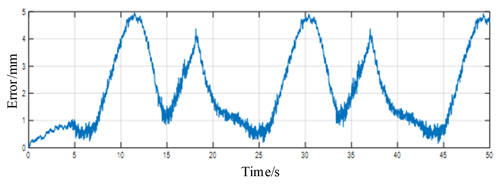

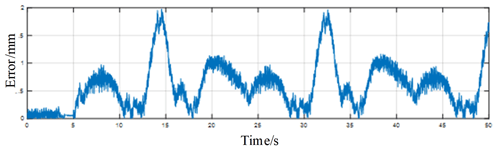

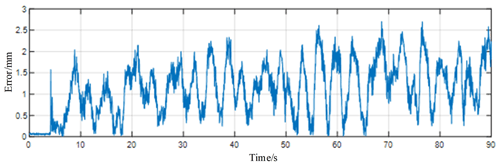

Figure 12 shows the error plot derived from the data recorded by the motion capture system. The error at the RCM constraint point is within 5 mm. In real surgery, the allowable positional margin at the RCM point is about 10 mm. This shows that the kinematic model based on the RCM point constraint adopted in this paper meets surgical requirements.

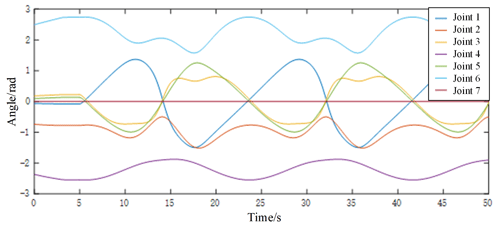

The sources of the error at the RCM constraint point were analyzed. One source is the finite response time of the manipulator's PID control. Another is manufacturing error from 3D printing and assembly of the flexible endoscope system. Therefore, the joint angles of the robot arm were recorded during the experiment, as shown in Fig. 13. Each joint angle changes periodically during the real-time motion of the arm, without vibration or shock, which is especially important for the safe and reliable operation of flexible endoscopic robots. The RCM point position and the position of the straight rod of the flexible endoscope were then obtained through the forward kinematics of the robot arm, and the RCM error was calculated, as shown in Fig. 14. The results show that this error is less than 2 mm.

3.4 Algorithm and experiment of heterogeneous primary–secondary teleoperation control based on position increments

The flexible endoscopic robot requires preoperative positioning before the surgical procedure. Primary–secondary teleoperation can help the surgeon perform this positioning and locate the lesion, so it has great application value in flexible endoscopic surgery. In this paper, a primary–secondary heterogeneous teleoperation control algorithm based on position increments is used to control the end of the flexible endoscopic robot accurately through proportional mapping.

3.4.1 Primary–secondary teleoperation control algorithm

In this paper, the Touch hand controller is used as the primary device, and the flexible endoscopic robot is the secondary device. Teleoperation must still satisfy the RCM constraint. However, the RCM constraint causes hand–eye incoordination, which affects the quality of surgery. To solve this problem, the seventh joint of the robot arm is controlled separately and is held fixed during the inverse kinematics solution. The block diagram of teleoperation control under RCM constraints is shown in Fig. 15.

In the primary–secondary heterogeneous teleoperation control algorithm based on position increments, the zero position of the primary device is pm0 and the zero position of the end of the flexible endoscopic robot is ps0. ps0 is not fixed and is adjusted according to actual surgical requirements. The current position of the primary device is pmc, and the current position of the end of the flexible endoscopic robot is psc. The position-increment mapping scheme adopted in this paper is as follows:

The above formula indicates that the position increment of the primary device relative to its zero position at a given time is (pmc−pm0). Because of primary–secondary heterogeneity and the surgical application scenario, this increment is multiplied by a scale factor k to obtain the position increment of the secondary device relative to its zero position. Likewise, the mapping from the joint angle of the primary device to the seventh joint angle of the robot arm is multiplied by a corresponding proportional coefficient to ensure safe motion.
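The position-increment mapping reduces to p_s = p_s0 + k (p_mc − p_m0), applied per axis. The sketch below also includes a scaled mapping for the seventh joint. Its name, reference angles and clipping limits (±2.8 rad) are hypothetical placeholders, not values from the paper. The resulting p_s would still be passed through the RCM-constrained inverse kinematics.

```python
import numpy as np


def map_increment(p_m_cur, p_m0, p_s0, k):
    """Position-increment mapping: p_s = p_s0 + k * (p_m_cur - p_m0)."""
    return np.asarray(p_s0, float) + k * (np.asarray(p_m_cur, float) - np.asarray(p_m0, float))


def map_roll(theta_m_cur, theta_m0, theta7_0, k_r, theta7_limits=(-2.8, 2.8)):
    """Hypothetical scaled mapping of a primary-device joint angle to joint 7, clipped to assumed limits."""
    theta7 = theta7_0 + k_r * (theta_m_cur - theta_m0)
    return float(np.clip(theta7, *theta7_limits))
```

For example, with k = 0.5, a 20 mm motion of the primary device moves the endoscope end by 10 mm. Re-zeroing during surgery amounts to resetting p_m0 and p_s0 to the current positions.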

3.4.2 Primary–secondary teleoperation experiments and results

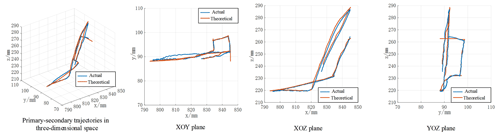

To test the primary–secondary tracking performance of the flexible endoscopic robot, the following experiment was designed. The surgeon operates the primary device to control the motion of the flexible endoscopic robot, and the position of the robot end is recorded. The theoretical positions commanded through the primary device are compared with the actual end positions of the secondary endoscopic robot. The resulting primary–secondary three-dimensional trajectories are plotted in Fig. 16.

Figure 16 shows that the flexible endoscopic robot closely follows the positions commanded by the surgeon, which meets the surgeon's basic requirements. The RCM constraint must still be satisfied during primary–secondary motion, and the calculated RCM error is shown in Fig. 17.

Compared with traditional handheld endoscope operation, using a flexible endoscopic robot to provide the surgeon with a surgical field of view is a more intelligent and convenient solution. To realize autonomous tracking of surgical instruments, the position of the flexible endoscopic robot is adjusted according to the image fed back by the endoscope. This keeps the surgical instruments in the center of the surgical field of view at all times. Two conditions must be met. First, the system must identify different types of surgical instruments in the endoscopic image and obtain their positions. Second, it must drive the endoscope by adjusting the robot arm position dynamically in real time to maintain a good surgical field of view.

4.1 Selection of a deep learning object detection algorithm

For the processing of endoscopic visual information, this section focuses on identifying and locating surgical instruments. Traditional visual recognition and positioning methods, such as detection based on attached markers, are unsuitable for endoscopic surgery because of the risk of cross-infection in clinical use, and their accuracy and speed do not meet the requirements. To identify and locate surgical instruments quickly and accurately in practice, this section tests several current mainstream object detection algorithms. Their accuracy, recall and inference speed are compared to select the final deep learning object detection algorithm.

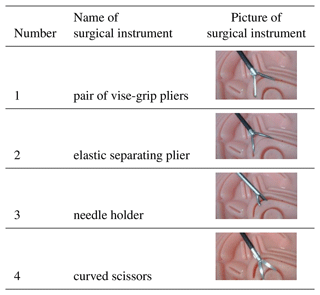

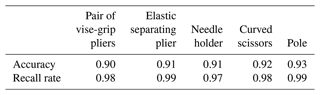

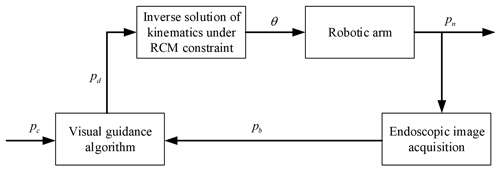

4.1.1 Data set creation

Because of limited experimental conditions and the lack of real surgical material from hospitals, this paper uses the Lap Game endoscopic surgery simulator as the data acquisition platform and selects several commonly used endoscopic surgical instruments. Following the sampling and annotation methods in the literature (Bawa et al., 2021, 2020), simulated surgery videos were recorded. Frames were extracted at equal intervals with a Python script so that every stage of the surgical process was sampled evenly, yielding the endoscopic simulated-surgery data set. Details are given in Table 1.
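Equal-interval frame sampling can be sketched in Python with OpenCV. The helper names, the output naming scheme and the PNG format are assumptions. The index calculation is kept separate so that it can be tested without a video file.

```python
import numpy as np


def equal_interval_indices(total_frames, n_samples):
    """Indices of up to n_samples distinct frames spread evenly over total_frames frames."""
    if n_samples <= 0 or total_frames <= 0:
        return []
    n = min(n_samples, total_frames)
    # Spacing is >= 1 frame, so truncating to int keeps the indices distinct.
    return [int(i) for i in np.linspace(0, total_frames - 1, n).astype(int)]


def extract_frames(video_path, out_dir, n_samples):
    """Save evenly spaced frames from video_path into out_dir as PNG files (requires OpenCV)."""
    import os
    import cv2  # imported here so the index helper can be used without OpenCV installed

    os.makedirs(out_dir, exist_ok=True)
    cap = cv2.VideoCapture(video_path)
    total = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))
    saved = 0
    for idx in equal_interval_indices(total, n_samples):
        cap.set(cv2.CAP_PROP_POS_FRAMES, idx)
        ok, frame = cap.read()
        if ok:
            cv2.imwrite(os.path.join(out_dir, f"frame_{idx:06d}.png"), frame)
            saved += 1
    cap.release()
    return saved
```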

To obtain the accurate position of the end of each surgical instrument, this paper divides each instrument into two regions: the end part and the straight rod part. Each annotated region records its pixel center position and its pixel size, as shown in Fig. 18.

After the images were collected, they were annotated with the rotated-box annotation tool roLabelImg. The final data set contains 1000 images with 4000 annotations: 2000 on the ends of surgical instruments and 2000 on their straight rods.
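Here each rotated box is assumed to be stored as a center, width, height and angle, as roLabelImg does. A sketch converting such a box to its four corner pixels is below. The angle is assumed to be in radians and measured in image coordinates. Because the y axis points down, a positive angle appears clockwise on screen. Check your tool's convention before relying on this. The box center (cx, cy) is what the tracking stage later uses as the instrument position.

```python
import math


def rotated_box_corners(cx, cy, w, h, angle):
    """Four corner pixels of a rotated box from centre, width, height and angle (rad, assumed convention)."""
    c, s = math.cos(angle), math.sin(angle)
    half = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    # Rotate each half-extent offset about the centre, then translate to the centre.
    return [(cx + dx * c - dy * s, cy + dx * s + dy * c) for dx, dy in half]
```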

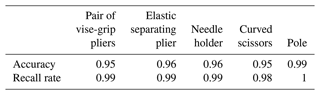

4.1.2 Comparison of deep learning object detection algorithms

Among current mainstream deep learning object detection algorithms, three that suit this task, R3Det (Yang et al., 2021), Oriented R-CNN (Xie et al., 2021) and RTMDet (Lyu et al., 2022), were selected and compared in several respects.

Of the three algorithms, RTMDet clearly outperforms the other networks in accuracy and recall. In addition, RTMDet runs inference at 10 frames per second (fps), much faster than Oriented R-CNN (5.91 fps) and R3Det (5.65 fps), which meets the demand of real-time tracking.

4.2 Autonomous tracking algorithm for flexible endoscopic robots

Here the center pixel of the surgical instrument detection box is denoted pb, and the center pixel of the image is denoted pc. The goal of autonomous tracking is to bring pb as close to pc as possible to obtain a good view. To calculate the exact position of a surgical instrument in the endoscope coordinate system, the monocular endoscope must first be calibrated to obtain its intrinsic matrix and hand–eye calibration matrix:

With the hand–eye calibration matrix above, the transformation of a point in space from the manipulator base coordinate system to the pixel coordinate system is obtained:

In the above equation, Zc is the depth, f is the camera focal length, w is the actual width of the target object and p is its width in pixels in the image. Similarly, the transformation from the pixel coordinate system to the robot arm base coordinate system is expressed as
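The pinhole relations above can be sketched in three steps: depth from the known object width (Zc = f·w/p), back-projection of a pixel through the intrinsic matrix K, and a 4 × 4 transform into the base frame. The camera-to-base transform is assumed to be T_a·T_b (end pose times hand–eye matrix), i.e. the camera pose in the base frame. The extrinsic matrix that maps base coordinates into the camera frame is its inverse. Units must be consistent: pixels for f and p, and metres for w.

```python
import numpy as np


def depth_from_width(f_px, width_m, width_px):
    """Monocular depth estimate Zc = f * w / p from similar triangles."""
    return f_px * width_m / width_px


def pixel_to_base(u, v, Zc, K, T_base_cam):
    """Back-project pixel (u, v) at depth Zc into the camera frame, then into the robot base frame."""
    p_cam = Zc * np.linalg.solve(K, np.array([u, v, 1.0]))
    return (T_base_cam @ np.append(p_cam, 1.0))[:3]


def base_to_pixel(p_base, K, T_base_cam):
    """Project a base-frame point into pixel coordinates (inverse of pixel_to_base)."""
    p_cam = (np.linalg.inv(T_base_cam) @ np.append(p_base, 1.0))[:3]
    uvw = K @ p_cam
    return uvw[:2] / uvw[2]
```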

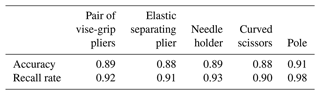

Note that [R|T] in the above equation is the extrinsic matrix. It is obtained by left-multiplying the hand–eye calibration matrix Tb by the pose matrix Ta of the manipulator end in the robot base coordinate system. In the base coordinate system of the robot arm, the center of the end of the surgical instrument is pa2, the center of the camera field of view is pa1, the end of the flexible endoscopic robot is pt1 and the RCM point is po. The expected end position of the flexible endoscopic robot after adjustment is pt2.

From the similar-triangle relationship in Fig. 19, the movement vector of the end of the instrument arm in the base coordinate system of the robot arm is

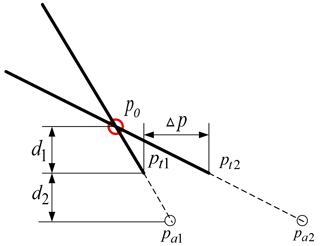

Note that d1 and d2 are known in advance and remain constant in this paper. The camera's low resolution causes a large calibration error, and visual depth estimation adds further error. The above formula is therefore essentially a proportional control law, in which the scale factor α determines the final tracking performance. This gives an autonomous tracking algorithm that works under specific conditions. The control framework is shown in Fig. 20.

In Fig. 20, pc is the target position of the surgical instrument center in the image, pb is its current position in the image, pn is the current position of the end of the flexible endoscopic robot and pd is the target position of the end of the flexible endoscopic robot.
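The similar-triangle formula with d1 and d2 cannot be recovered from this text. The sketch below therefore illustrates only the proportional structure the text describes: each cycle, the target for the endoscope end moves by α times the estimated offset between the instrument and the view center. The 2-D toy model and its gains are hypothetical. It mimics calibration error by back-projecting with a gain that differs from the true one.

The model shows why α governs performance. The pixel error shrinks by a factor of 1 − α·g_true/g_est per cycle. A modest α converges despite miscalibration, while too large an α overshoots and diverges.

```python
import numpy as np


def tracking_step(p_tip, p_instr_base, p_center_base, alpha):
    """One proportional update of the endoscope-end target: p_tip + alpha * (instrument - view centre)."""
    return np.asarray(p_tip, float) + alpha * (np.asarray(p_instr_base, float) - np.asarray(p_center_base, float))


def simulate(alpha, gain_true=2000.0, gain_est=1500.0, steps=15):
    """Toy 2-D model: pixel offset = gain_true * (instrument - tip); estimates use a miscalibrated gain."""
    p_instr = np.array([0.012, -0.008])   # hypothetical instrument position [m] in a plane
    p_tip = np.zeros(2)
    history = []
    for _ in range(steps):
        pixel_err = gain_true * (p_instr - p_tip)     # what the detector would report (pb - pc)
        history.append(float(np.linalg.norm(pixel_err)))
        offset_est = pixel_err / gain_est             # back-projected offset with calibration error
        p_tip = tracking_step(p_tip, p_tip + offset_est, p_tip, alpha)
    return history


print(simulate(0.5)[-1], simulate(1.6)[-1])  # small residual vs. growing error
```

In the real system, each new target would be converted into an arm-end pose with the RCM-constrained inverse kinematics before being sent to the arm.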

4.3 Experimental study on autonomous tracking of the flexible endoscopic robot

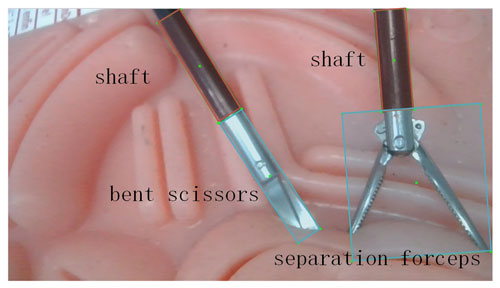

Based on the comparison of deep learning object detection models in the previous section, RTMDet was selected to generate the object recognition model for the surgical instrument tracking experiment. Curved scissors and separation forceps were used as the experimental instruments. Images extracted from the surgical simulator videos were used as the training set, and only the end effector of each instrument was annotated. The resulting model returns the center coordinates of the detection box in real time. Based on the motion control strategy introduced in the previous section, the simulation experiment platform shown in Fig. 21 was built.

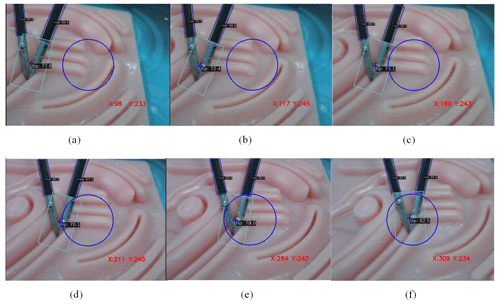

The experiment verifies that the motion control strategy proposed in this section can bring the target surgical instrument to the center of the visual field under the guidance of the endoscopic image. As shown in Fig. 22, the center of the end of the surgical instrument moves gradually from either side of the visual field to the center of the endoscopic visual field.

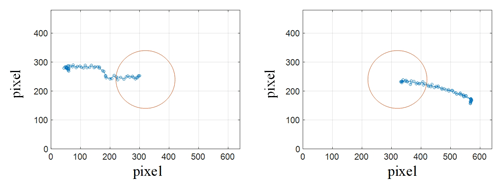

To describe quantitatively where the center of the instrument end lies in the endoscopic visual field during this adjustment, its coordinates in the camera pixel coordinate system were recorded in real time during autonomous tracking, as shown in Fig. 23.

In Fig. 23, the usable central region of the endoscope's field of view is defined as a circle centered at pixel coordinates (320, 240) with a radius of 100 pixels, marked in red. Figure 23 shows that, after adjustment by the autonomous tracking strategy, the center of the end of the surgical instrument lies within the central region of the endoscope field of view. This provides the surgeon with a good surgical field of view and meets surgical requirements.
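The central-region criterion can be checked numerically from the recorded pixel track. The sketch assumes the region used in Fig. 23: a center of (320, 240) and a radius of 100 pixels, presumably in a 640 × 480 image. The helper names are hypothetical.

```python
import math


def in_central_region(u, v, center=(320.0, 240.0), radius=100.0):
    """True if pixel (u, v) lies inside the circular central region of the endoscope image."""
    return math.hypot(u - center[0], v - center[1]) <= radius


def fraction_in_region(track, **kwargs):
    """Share of recorded (u, v) instrument-tip positions that fall inside the central region."""
    if not track:
        return 0.0
    return sum(in_central_region(u, v, **kwargs) for u, v in track) / len(track)
```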

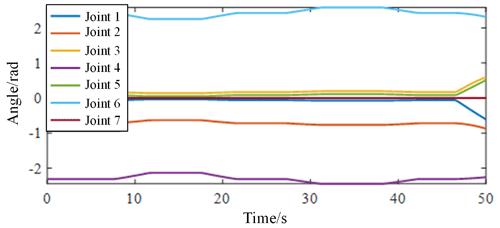

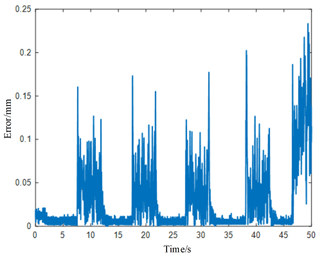

To further analyze the motion performance of the robot arm under autonomous tracking control, the angle of each joint was recorded in real time and the RCM error was calculated during autonomous tracking. As shown in Fig. 24, the joint angles change smoothly during autonomous tracking, stay within the joint limits and show no violent shaking. As shown in Fig. 25, the RCM error during autonomous tracking is within 1 mm. This meets the requirements of real surgery and avoids secondary trauma to the human body.

These experimental results show that the data set established in this paper is effective and that the adopted deep learning object detection algorithm identifies and locates surgical instruments accurately and quickly. On this basis, the autonomous tracking control algorithm based on visual guidance keeps the center of the surgical instrument in the middle of the endoscopic field of view and maintains a good RCM constraint throughout tracking.

In this paper, a flexible endoscopic robotic system was designed, and the key components of the flexible continuum joints were verified by finite element analysis and experiments to determine their optimal dimensions. The kinematics of the 2-DOF flexible continuum was modeled under the constant curvature assumption, and its workspace was analyzed. A kinematic calibration experiment was then carried out on the continuum, and the kinematic model was calibrated by drive compensation. Trajectory planning experiments were performed with the calibrated kinematic model, and the function of the flexible continuum was verified to assess the feasibility of its use in endoscopic surgery. To address the RCM constraint in endoscopic surgery, a motion control algorithm for the flexible endoscopic robot under the RCM constraint was derived, and a set of circular trajectories with a radius of 40 mm was planned for its end. The RCM error of the robotic arm during motion and the actual three-dimensional trajectory of the continuum end were then measured with the visual inspection system for comparative analysis. Next, a heterogeneous primary–secondary teleoperation control algorithm based on position increments was adopted to control the end of the flexible endoscopic robot precisely through proportional mapping. Finally, the RTMDet deep learning object detection algorithm was selected through comparative experiments to identify and locate surgical instruments, and autonomous tracking control based on visual guidance was implemented. During autonomous tracking, the RCM error remained below 1 mm, which meets the requirements of actual surgery.
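The conclusions refer to modeling the 2-DOF continuum under the constant curvature assumption. As an illustration, the sketch below gives the standard single-segment constant-curvature forward kinematics, mapping a bending angle, a bending-plane direction and an arc length to the tip position in the base frame. It assumes the base z axis lies along the undeflected backbone; the mapping from cable displacements to these parameters and the paper's drive-compensation calibration are not reproduced, and the name `constant_curvature_tip` is hypothetical.

```python
import math


def constant_curvature_tip(theta, phi, length):
    """Tip position (x, y, z) of one constant-curvature segment.

    theta  : bending angle of the segment in radians
    phi    : direction of the bending plane about the base z axis, in radians
    length : arc length of the backbone (output uses the same unit)
    """
    if abs(theta) < 1e-9:
        # Straight segment: limit of the curved-case expressions as theta -> 0.
        return (0.0, 0.0, float(length))
    r = length / theta                    # radius of curvature
    lateral = r * (1.0 - math.cos(theta))  # offset within the bending plane
    return (lateral * math.cos(phi),
            lateral * math.sin(phi),
            r * math.sin(theta))
```

For a segment bent by a quarter turn (theta = pi/2) in the x-z plane (phi = 0) with arc length 5*pi, the radius of curvature is 10 and the tip lies at approximately (10, 0, 10). Sweeping theta over its admissible range and phi over a full turn traces the kind of workspace surface the paper analyzes.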

All the figures are original. All the data in this paper can be obtained on request from the corresponding author.

SQ designed the new mechanism. JZ and ZP developed the simulation models. JZ and ZW carried out the experiments and the experimental analysis. JZ and SQ wrote the paper. JZ, SQ and XS adjusted the format of the paper.

The contact author has declared that none of the authors has any competing interests.

Publisher’s note: Copernicus Publications remains neutral with regard to jurisdictional claims made in the text, published maps, institutional affiliations, or any other geographical representation in this paper. While Copernicus Publications makes every effort to include appropriate place names, the final responsibility lies with the authors.

This research has been supported by the National Natural Science Foundation of China (grant nos. 52175013, U19A20101).

This paper was edited by Zi Bin and reviewed by three anonymous referees.

Aghakhani, N., Geravand, M., Shahriari, N., Vendittelli, M., and Oriolo, G.: Task control with remote center of motion constraint for minimally invasive robotic surgery, in: Proceedings of 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 17 October 2013, 1050–4729, https://doi.org/10.1109/ICRA.2013.6631412, 2013.

Bawa, V. S., Singh, G., Kaping, A. F., Leporini, A., and Landolfo, C.: ESAD: Endoscopic Surgeon Action Detection Dataset, cs.CV, arXiv:2006.07164, https://doi.org/10.48550/arXiv.2006.07164, 12 June 2020.

Bawa, V. S., Singh, G., Kaping, A. F., Oleari, E., Leporini, A., Landolfo, C., Zhao, P., Xiang, X., and Luo, G.: The SARAS Endoscopic Surgeon Action Detection (ESAD) dataset: Challenges and methods, cs.CV, arXiv:2104.03178, https://doi.org/10.48550/arXiv.2104.03178, 7 April 2021.

Berkelman, P. and Ma, J.: A compact modular teleoperated robotic system for laparoscopic surgery, Int. J. Robot. Res., 28, 1198–1215, https://doi.org/10.1177/0278364909104276, 2009.

Brahmbhatt, J. V., Gudeloglu, A., Liverneaux, P., and Parekattil, S. J.: Robotic microsurgery optimization, Arch. Plast. Surg., 41, 225–230, https://doi.org/10.5999/aps.2014.41.3.225, 2014.

Chen, M. M., Orosco, R. K., Lim, G. C., and Holsinger, F. C.: Improved transoral dissection of the tongue base with a next-generation robotic surgical system, The Laryngoscope, 128, 78–83, https://doi.org/10.1002/lary.26649, 2018.

Dai, Z. C., Wu, Z. H., Zhao, J. R., and Xu, K.: A robotic laparoscopic tool with enhanced capabilities and modular actuation, Sci. China Technol. Sci., 60, 569–572, https://doi.org/10.1007/s11431-018-9348-9, 2019.

Dhumane, P. W., Diana, M., Leroy, J., and Marescaux, J.: Minimally invasive single-site surgery for the digestive system: A technological review, J. Minim. Access. Surg., 7, 40–51, https://doi.org/10.4103/0972-9941.72381, 2011.

Ding, J., Goldman, R. E., Xu, K., Allen, P. K., Fowler, D. L., and Simaan, N.: Design and coordination kinematics of an insertable robotic effectors platform for single-port access surgery, IEEE/ASME Trans. Mechatronics, 18, 1612–1624, https://doi.org/10.1109/TMECH.2012.2209671, 2012.

Ejaz, A., Sachs, T., He, J., Spolverato, G., Hirose, K., Ahuja, N., Wolfgang, C. L., Makary, M. A., and Weiss, M.: A comparison of open and minimally invasive surgery for hepatic and pancreatic resections using the nationwide inpatient sample, Surgery, 156, 538–547, https://doi.org/10.1016/j.surg.2014.03.046, 2014.

Freschi, C., Ferrari, V., Melfi, F., Mosca, F., and Cuschieri, A.: Technical review of the da Vinci surgical telemanipulator, IJMRCAS, 9, 396–406, https://doi.org/10.1002/rcs.1468, 2013.

Gomes, P.: Surgical robotics: Reviewing the past, analysing the present, imagining the future, Robot. Cim.-Int. Manuf., 27, 261–266, https://doi.org/10.1016/j.rcim.2010.06.009, 2011.

Guthart, G. and Salisbury, J. K.: The Intuitive™ telesurgery system: overview and application, in: Proceedings of IEEE International Conference on Robotics and Automation, Symposia Proceedings, San Francisco, CA, USA, 24–28 April 2000, 618–621, https://doi.org/10.1109/ROBOT.2000.844121, 2000.

Kanno, T., Haraguchi, D., Yamamoto, M., Tadano, K., and Kawashima, K.: A forceps manipulator with flexible 4-DOF mechanism for laparoscopic surgery, IEEE/ASME Trans. Mechatronics, 20, 1170–1178, https://doi.org/10.1109/TMECH.2014.2327223, 2014.

Li, Q., Wang, S., Yun, J., Liu, D., and Han, B.: Haptic device with gripping force feedback, in: Proceedings of the 2005 IEEE International Conference on Robotics and Automation, Barcelona, Spain, 18–22 April 2005, 1190–1195, https://doi.org/10.1109/ROBOT.2005.1570277, 2005.

Liang, K., Xing, Y., Li, J., Wang, S., Li, A., and Li, J. H.: Motion control skill assessment based on kinematic analysis of robotic end-effector movements, IJMRCAS, 14, 1845, https://doi.org/10.1002/rcs.1845, 2018.

Lyu, C., Zhang, W., Huang, H., Zhou, Y., Wang, Y., Liu, Y., and Zhang, S.: RTMDet: An Empirical Study of Designing Real-Time Object Detectors, cs.CV, arXiv:2212.07784, 14 December 2022.

Sadeghian, H., Zokaei, F., and Hadian, J. S.: Constrained kinematic control in minimally invasive robotic surgery subject to remote center of motion constraint, J. Intell. Robot. Syst., 95, 901–913, https://doi.org/10.1007/s10846-018-0927-0, 2019.

Sandoval, J., Poisson, G., and Vieyres, P.: Improved dynamic formulation for decoupled cartesian admittance control and RCM constraint, in: Proceedings of 2016 IEEE International Conference on Robotics and Automation, Stockholm, Sweden, 9 June 2016, 16055557, 1124–1129, https://doi.org/10.1109/ICRA.2016.7487242, 2016.

Su, H., Li, J., and Kong, K.: Development and experiment of the Internet-based telesurgery with MicroHand robot, Adv. Mech. Eng., 10, 168781401876192, https://doi.org/10.1177/1687814018761921, 2018.

Sung, G. T. and Gill, I. S.: Robotic laparoscopic surgery: a comparison of the da Vinci and Zeus systems, Urology, 58, 893–898, https://doi.org/10.1016/S0090-4295(01)01423-6, 2001.

Tewari, A., Peabody, J., Sarle, R., Balakrishnan, G., Gemal, A., Shrivastava, A., and Menon, M.: Technique of da Vinci robot-assisted anatomic radical prostatectomy, Urology, 20, 569–572, https://doi.org/10.1016/S0090-4295(02)01852-6, 2013.

Wang, Y., Li, L., and Li, G.: An Analytical Solution for Inverse Kinematics of 7-DOF Redundant Manipulators with Offsets at Elbow and Wrist, in: Proceedings of 2022 IEEE 6th Information Technology and Mechatronics Engineering Conference, Chongqing, China, 23 March 2022, 1096–1103, https://doi.org/10.1109/ITOEC53115.2022.9734617, 2022.

Wang, Z., Liu, G., Qian, S., Wang, D., Wei, X., and Yu, X.: Tracking control with external force self-sensing ability based on position/force estimators and non-linear hysteresis compensation for a backdrivable cable-pulley-driven surgical robotic manipulator, Mech. Mach. Theory, 183, 105259, https://doi.org/10.1016/j.mechmachtheory.2023.105259, 2023.

Wang, Z. Y., Bao, S. Y., Wang, D. M., and Qian, S.: Design of a Novel Flexible Robotic Laparoscope Using a Two Degrees-of-Freedom Cable-Driven Continuum Mechanism with Major Arc Notches, J. Mech. Robot., 15, 064502, https://doi.org/10.1115/1.4056502, 2023.

Xie, X., Cheng, G., Wang, J., Yao, X., and Han, J.: Oriented R-CNN for object detection, cs.CV, arXiv:2108.05699, 12 August 2021.

Yang, X., Yan, J., Feng, Z., and He, T.: R3Det: Refined single-stage detector with feature refinement for rotating object, in: Proceedings of the AAAI conference on artificial intelligence, February 2021, 3163–3171, https://doi.org/10.1609/aaai.v35i4.16426, 2021.

Zenoni, S. A., Arnoletti, J. P., and de la Fuente, S. G.: Recent developments in surgery: minimally invasive approaches for patients requiring pancreaticoduodenectomy, JAMA Surg., 148, 1154–1157, https://doi.org/10.1001/jamasurg.2013.366, 2013.

Zhang, G., Liu, S., Yu, W., Wang, L., Liu, N., Li, F., and Hu, S.: Gasless laparoendoscopic single-site surgery with abdominal wall lift in general surgery: initial experience, Surg. Endosc., 25, 298–304, https://doi.org/10.1007/s00464-010-1177-9, 2011.
